This topic is to encourage some discussion about what we feel might be known as the “ideal retrocomputer”.

Clearly there is no simple answer, and everyone will have their own personal thoughts.

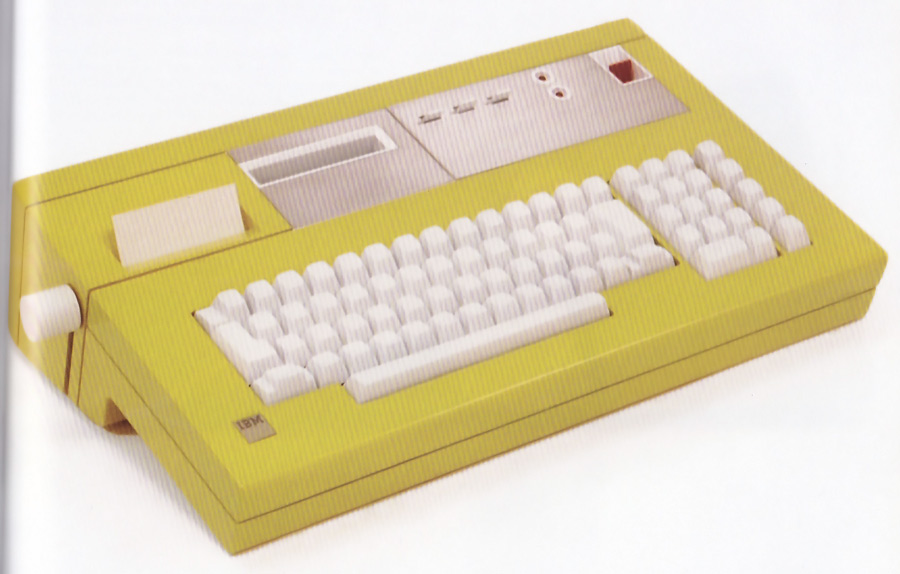

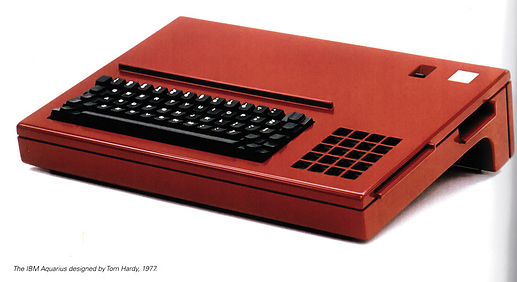

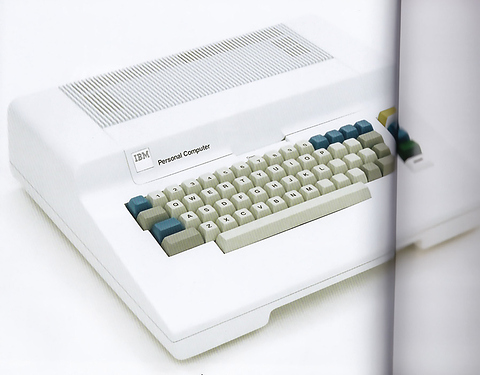

An earlier discussion about memory size, graphics capabilities and operating system requirements suggested that the route we have followed since the introduction of the IBM PC in 1981 has been far from ideal, and that with a few twists in history we might have had an entirely different journey and destination over the last 40 years.

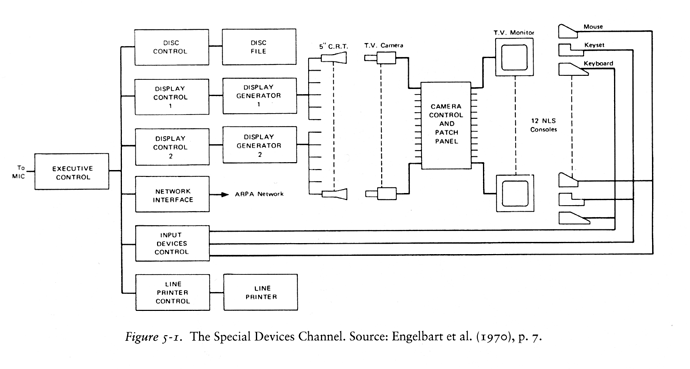

I think my historical journey must start with Doug Engelbart’s “Mother of All Demos” from December 1968. The full-length video is available on YouTube.

Engelbart’s work at the Stanford Research Institute had a strong influence on the work done at Xerox PARC, and on the Alto machine they created in order to implement Engelbart’s concepts in reasonably sized (workstation) hardware.

The Xerox Alto is well documented on Wikipedia, and is considered by some to be the first personal computer. Applications included document editing tools and engineering tools, among them Mead and Conway’s integrated circuit editor.

The Alto was originally programmed in BCPL, but it became a strong influence on experimental new software such as Smalltalk-76, and on Niklaus Wirth’s work on the Swiss-built Lilith workstation in the late 1970s and, later, Project Oberon.

All of the elements of advanced GUI-based workstations were available by 1980, roughly 10 years after Engelbart’s NLS demo.

It is documented that Steve Jobs visited Xerox PARC with some Apple engineers sometime in 1979, and their work on the Lisa and Macintosh was reputed to be heavily influenced by what they saw there.

Contrary to popular belief, work on the Lisa and Macintosh had been underway for several months before their visit.

The Macintosh of 1984 could be argued to be a cost-engineered descendant of the Xerox Alto. The introduction of the 68000 in 1979 and cheaper RAM made it possible to gain an order of magnitude in performance over 8-bit designs, and with it the ability to support a GUI.

The basic model Alto had cost $32,000 in 1979; by 1984 its concepts had been cost-reduced into a $2495 Macintosh. Even so, the Mac faced stiff competition from the IBM PC and the numerous PC clones that were available. Initial Mac sales were hindered by an acute dearth of software titles compared to the by then well-established PC.

That concludes my Golden Age of Retrocomputers: the decade from 1969 to 1979, which coincides nicely with the first 10 years of Xerox PARC.