Bob Belleville at PARC proposed a small-scale version of the Star, using Intel or Motorola processors, but at the time he proposed it, it would’ve been more expensive than the Star. That changed a couple of years later, when it would have become the cheaper option. It also would’ve required rewriting everything they were doing for the Star and removing some features, so they didn’t go forward with it.

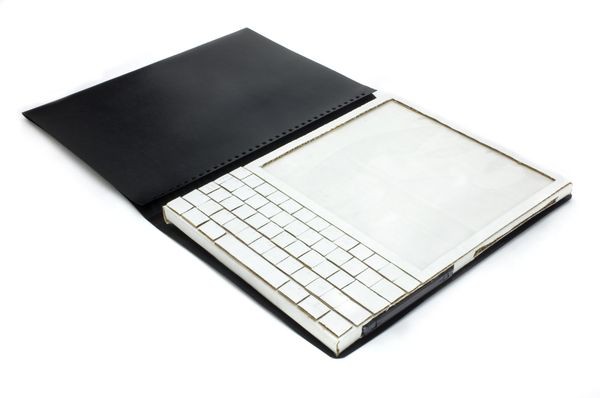

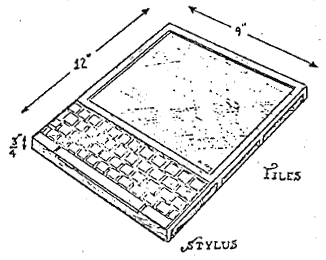

BTW, since Chuck Thacker was working for Microsoft Research at the time, I think the tablet he held up was a Tablet PC. I never used one of those (I worked with Telxons in the 1990s, some of them running Windows), but I imagine it wasn’t locked down. So running Squeak on it, without restrictions on code, would’ve worked when he talked about that.

Re. Apple and Squeak

I used to track this. Last I heard (many years ago), Apple allowed Squeak on the iPad, but the only way to share code was by uploading projects to a website, so that others could run the code through a web-emulated version of Squeak (i.e., users were not allowed to download code to the iPad).

What I remember is that Apple was worried Squeak could be used as a vector for security exploits, since you can access OS functions and the file system from within Squeak.

I imagine that, as on so many commercial Linux distributions, apps run as root on iOS, so all apps have to be treated like “potential criminals.” As Alan Kay has said for years, the problem should be solved by designing a secure OS architecture, not by looking at programming with such suspicion. It’s a good point.

I’ve seen this with PCs in schools. School districts lock them down and make it difficult for programming students to download new languages to them (because the language isn’t “certified” by the district). When I was in school, the 8-bit computers weren’t like this at all. We could run what we wanted on them without fear of screwing up the system. A good part of that was that the OS was in ROM, so it could not be altered by software. Another part was that we didn’t have hard disks on the school computers, and schools could block writes to their floppies simply by covering the “write notch” with tape that was difficult to remove (this could be circumvented with scissors, though I never saw that happen). Administration was a lot simpler with this setup, and the risk was a lot lower.

I’m not saying we should go back to that exact setup. I use it as an illustration that it is possible to secure systems without interfering with users’ ability to program, if the powers that be want it. It is a matter of how the system is designed, though, and currently available system designs may not support current needs, so new ones would have to be researched.

![image](https://blogs-images.forbes.com/timothylee/files/2011/07/083Oevi7Gp3RD_327.jpg)