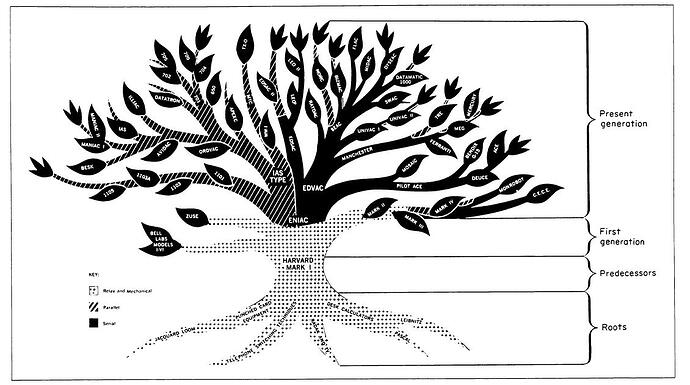

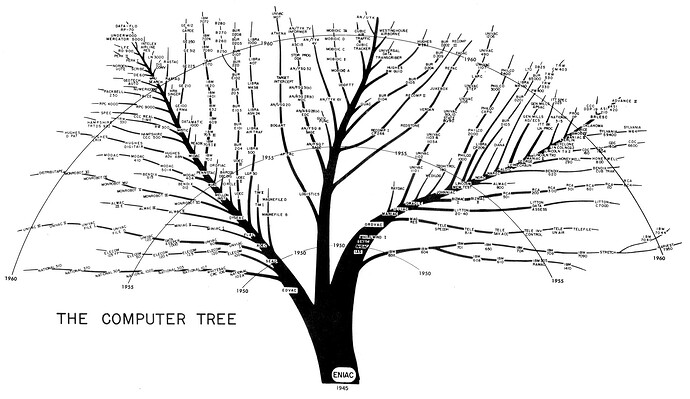

This is how it was when I was in school in the 1980s. I did a couple of research papers on the history of computers then, and my sources went from Babbage, or Pascal’s Pascaline, then to Turing, the Bombe, and Colossus, then to ENIAC, EDSAC, Harvard Mark I, maybe some others, and would end with mainframes from IBM in the '60s, and maybe some minicomputers (no manufacturers named) from the '70s. Nothing about Whirlwind, or SAGE, nothing really about DEC, or Remington Rand. I mean, the closest I’d get to any history of DEC was, ironically, if I found something about the history of video games outside of a school project, since they’d talk about Spacewar!, which ran on a PDP-1.

This felt a bit confusing, because I was using interactive computers a lot, with 8- and 16-bit machines. Nothing I read talked about where they came from, except in the computer magazines I used to read avidly, which would say they came from Apple, Atari, Commodore, Radio Shack, etc., as if they invented the concept. Though, at some point I learned about the UK computer market, with Sinclair, Acorn, etc. I used to watch the BBC show The Computer Programme fairly regularly, which often featured the BBC Micro.

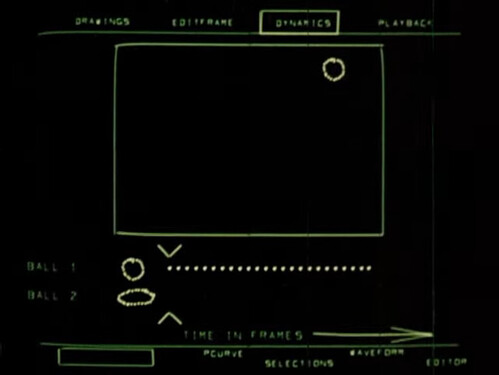

It wasn’t until I was almost out of college in the early '90s that I saw documentaries that focused on the history of what’s now “the main event” in computing, starting with The Machine That Changed the World, and then Cringely’s documentary, Triumph of the Nerds, which came out in 1995/96. Both included coverage of Xerox PARC. As I remember, both contained the first coverage I ever saw of Doug Engelbart’s “Mother of All Demos,” in 1968. Even so, they didn’t really show how amazing it was, because one of the demo’s major points was to demonstrate collaborative computing, where groups could work on a shared knowledge base together, and people could see and speak to each other through teleconference while working on shared documents. The coverage back then was all about Engelbart’s invention of the mouse, and how it interacted with a GUI, which is really trivial by comparison.

Even describing it this way kind of misses the point, because the reason Engelbart created the technology was that he wanted it to be used in a process of increasing a kind of “group intelligence,” as a way of hopefully scaling beyond the limits of individual intelligence, to take on problems too complex for any individual to comprehend, let alone address well.

What they also always said was that Engelbart’s invention was great, but that hardly anyone knew who he was. Yet his technological work, to a significant degree, formed the basis for everything that has followed. How we use it, though, is a far cry from what he intended. This is a common refrain whenever one looks into the research that was done then.