Well done! A spectacular success!

So how many megs of RAM are needed to run 4K FOCAL?

You can’t really compare them just because both were interactive. BASIC and FOCAL were for programmable-calculator-type problems with floating-point numbers in the 1970s, or for simple industrial control.

As for FORTH, it really had no direction other than being threaded code (fig-FORTH) and cheap in the 1980s on the 8080 (S-100 bus) and 6502 (Apple), and an easy way to control homebrew-style devices like a small telescope.

The hardware of 1970 (PDP) and 1980 (Apple) cannot be compared because of the cost of memory, which defined what memory space and word size were possible: 4K for the PDP-8, 16 KB for the 8008, 64 KB for the 8080, 256 KB for the PDP-11.

The other thing is that the PDP-8 did not lie about memory speed. You GOT 4K of RANDOM ACCESS memory in a PDP-8 time cycle, often 0.75 µs. You needed another cycle to write back memory. Plain and simple timing. With modern CPUs, who knows just how SLOW an instruction can be, with modern DRAM being a SERIAL-style memory and pipelining making it a serial CPU.

Ben.

Sci-fi novel quote: “Don’t you just love FTL download speeds”.

Ben - the compilation report was as follows:

Sketch uses 22896 bytes (1%) of program storage space. Maximum is 2031616 bytes.

Global variables use 49852 bytes (9%) of dynamic memory, leaving 474436 bytes for local variables. Maximum is 524288 bytes.

The point is, it doesn’t really matter anymore.

The Teensy board is less than $20 and it’s given me a full weekend’s entertainment, and I have learnt a lot about the history and capabilities of the PDP-8 and FOCAL.

Tomorrow, I will reprogram it with another cpu simulation and have a play about with something different.

I have written experimental interpreters that were hand assembled into 300 bytes and cpu simulations that are just 60 lines of C code, but right now I wanted a quick and easy means to implement and experience the PDP-8.

My eyesight is failing and I struggle these days to even wire up 0.1" pitch DIL packages. Having lost my enthusiasm for making real hardware from scratch, I have had to adapt to other means to still get a buzz from this hobby.

The 6502 and PDP-8 simulations have been fun, and have motivated me to tackle new projects having spent most of the last 6 months in the doldrums.

Nice to see the Teensy has ample memory for small stuff. A $20 toy computer gets you the same as a $2,000,000.00 mainframe from the 1970s, so that shows how demanding modern software is compared to back then.

Good luck in finding your next project, as interesting machines may not have public software around, or white mice in the area.

Ben.

Ben,

It is phenomenal the computing resources that you can now buy for $20.

Just 10 years ago when I encountered my first Arduino, with an Atmel ATmega328 microcontroller - they were priced at $20. The ATmega has 32K Flash and 2K RAM with a maximum clock frequency of 20MHz.

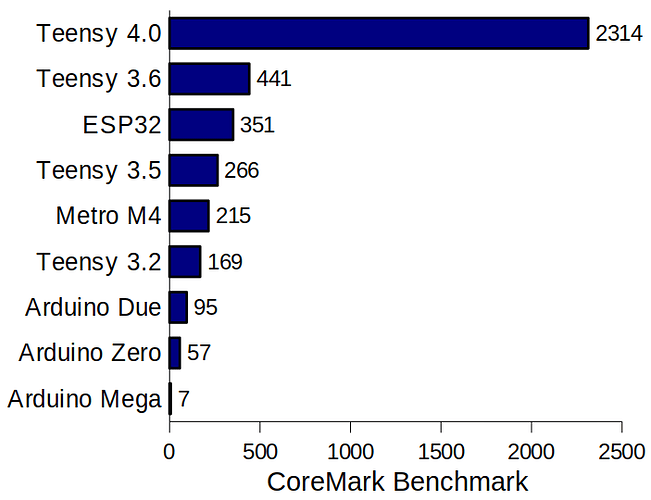

The Teensy 4.0 has 64 times the Flash, 256 times the RAM, 30 times the clock frequency and 330 times the CoreMark performance - according to this benchmark. That’s significant progress in under a decade.
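
As a rough check on those ratios, here is a small C sketch using only figures quoted in this thread: the flash and RAM totals from the compilation report, and the ATmega328's 32K flash, 2K RAM and 20MHz clock. The 600MHz Teensy clock is an assumption inferred from the "30 times" figure, and the macro and function names are made up for illustration.

```c
#include <assert.h>
#include <stdint.h>

/* Figures quoted in this thread. */
#define TEENSY_FLASH_BYTES 2031616u      /* from the compilation report */
#define TEENSY_RAM_BYTES    524288u      /* from the compilation report */
#define AVR_FLASH_BYTES    (32u * 1024u) /* ATmega328: 32K flash */
#define AVR_RAM_BYTES      (2u * 1024u)  /* ATmega328: 2K RAM */
#define AVR_CLOCK_MHZ       20u
#define TEENSY_CLOCK_MHZ    600u         /* assumption: 30 x 20 MHz */

/* Integer ratio of a newer figure to an older one, rounded down. */
static uint32_t ratio(uint32_t newer, uint32_t older)
{
    assert(older != 0);
    return newer / older;
}
```

With these inputs the flash ratio comes out at 62 rather than 64, because the report counts 2031616 bytes of program storage rather than a full 2MB. The RAM ratio is exactly 256.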

What I like about Teensy is that it presents a blank slate for code development, without the overhead or encumbrance of an OS.

This allows me full freedom to develop the experimental cpu code I choose, keeping the code simple with no overheads other than simple serial input and output routines.

Keeping the cpu and code simple gives me a better chance of ultimately being able to recreate it in TTL devices. My design is a 16-bit machine with a 4-bit bitslice architecture, which I should be able to implement in about 80 simple TTL parts.

The processor is loosely based on Wozniak’s “Sweet-16” 16-bit virtual machine, which consists of an accumulator plus a further 15 general purpose registers. Keeping the operations solely between the accumulator and one other register reduces the complexity of the datapaths.
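
To illustrate why accumulator-only operations keep the datapath simple, here is a minimal C sketch. It is not the actual instruction set of this design: the opcode names and the `step` function are hypothetical, and the only things taken from the description above are the 16-bit width, the accumulator, and the 15 further registers (R0 doubles as the accumulator, as in Sweet-16).

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical register-file model: R0 is the accumulator,
   R1..R15 are the general purpose registers. */
enum { ACC = 0, NUM_REGS = 16 };

/* Hypothetical opcodes, for illustration only. */
typedef enum { OP_LD, OP_ST, OP_ADD, OP_SUB } Op;

typedef struct {
    uint16_t r[NUM_REGS];
} Cpu;

/* Every operation pairs the accumulator with exactly one other
   register, so only one register has to be selected per step. */
static void step(Cpu *cpu, Op op, unsigned reg)
{
    assert(reg < NUM_REGS);
    switch (op) {
    case OP_LD:  cpu->r[ACC] = cpu->r[reg]; break;  /* ACC <- Rn */
    case OP_ST:  cpu->r[reg] = cpu->r[ACC]; break;  /* Rn <- ACC */
    case OP_ADD: cpu->r[ACC] = (uint16_t)(cpu->r[ACC] + cpu->r[reg]); break;
    case OP_SUB: cpu->r[ACC] = (uint16_t)(cpu->r[ACC] - cpu->r[reg]); break;
    }
}
```

Adding R1 and R2 into R3 then takes three steps (`OP_LD` 1, `OP_ADD` 2, `OP_ST` 3), which is the price paid for having only one register-select path.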

I have written enough assembly language to have routines for text input and output, numerical entry and output and hex to decimal conversion routines.

The next task is to write a simple monitor, and a tiny Forth-like interpreter which will allow self-hosted language development.

The primary takeaway I got from tinkering with FOCAL was how it provided a complete computing environment - even for a machine as resource limited as the PDP-8.

This was no mean achievement, and the credit must go to the smart software engineers at DEC who had massaged a complete floating-point interpreted, interactive, extensible language, complete with line editor into just 4K words of core memory.

Remember that the PDP-8 was originally based on DTL and used only about 1500 transistors and 10,000 diodes in the logic design. Additionally it used the rather unfamiliar “pulse logic” to achieve setting and clearing of the various flip-flops used in the very few registers present in the PDP-8 design.

I have read somewhere that almost half of the transistors in the PDP-8 were used to handle the addressing, read and write-back logic needed by the core memory.

With FOCAL loaded into core, it totally transforms this minimum architecture into a powerful, personal computer (by 1970’s standards), which when playing Lunar Lander this weekend - reminded me of the early text based, adventure games I encountered when the first micros appeared in school.

So here was a machine - with its beginnings clearly embedded in the PDP-5 (1963) which by 1975 was a 19" rack that could be built into medical instrument trolleys, or run a CNC machine tool, typeset text for a local newspaper or run the information display systems for the BART (Bay Area Rapid Transit) system.

With an extensive back-catalogue of software and applications behind it, the PDP-8 had achieved a cult following as the world moved to early microprocessors in the mid-1970s. Intersil and Harris shrank the PDP-8 architecture into a microprocessor format, achieving yet a further generation of integration. The resulting HD6120 found its way into several products including DEC’s range of DECmate intelligent video terminals, which were primarily aimed at data entry and word processing applications.

Bringing things into the 21st Century, I found this interesting site describing an SBC based on the Harris HD6120

A register file is a big pain for a TTL computer, because you have only a few chips you can design with: 74170, 74172. If you use more modern chips, a design using 2901s is a better option. 2901s are hard to find, so buy them now if you can find them.

10 to 15 MSI chips for a TTL 4-bit bitslice in the data path is a good ballpark figure. Have you looked at the 1802 CPU?

Rather than working on a 16-bit CPU in LS TTL (FPGA development), and since memory is cheap, I have gone to a simple 32-bit CPU: two 16-bit ALU cards and one control card. Five 256x4 PROMs (60 ns) are used for control. A 20-bit design has one 20-bit ALU card in the data path. Both have excess-3 addition and 512 KB of address space. Ballpark timing runs at 1.8432 MHz. Still tweaking both instruction sets and debugging software.

The PDP-8 was compact because it could use a 12-bit instruction word. The IBM 1130 also had a well-thought-out instruction set with indexing (core registers 0-3?) that I am sure helped with FORTH’s data/program stacks idea.

Having a register file in core memory was a common idea in the 1960s. Then a slow machine was better than NO machine. Ben.

Ben,

Register file chips are as rare as hen’s teeth these days.

Last year I managed to find a couple of tubes of 74F219 - these are a 16 x 4-bit RAM with a 10 ns access time - similar to the 74xx89 but with tristate, non-inverted outputs.

They say that every computer architecture is designed around the requirements of the memory - so my bitslice design is built around the 16 registers that these RAM chips provide.

The rest of the design was inspired by Wozniak’s Sweet-16 and the simplicity of the PDP-8.

I don’t want to create a design so complex - that I cannot easily implement it in TTL.

Wishing you well with your 20 bit design,

Ken.

Sadly, the very odd 74172 needed for Brad Rodriguez’s PISC processor is really hard to find. I looked into replacing it with other TTLs but the complexity isn’t worth it. It is better to simply do a different design around another register chip instead.

From the link:

Eight 74172s provide eight 16-bit registers in a three-port register file. This file may simultaneously write one register (“A”), read a second (“B”), and read or write a third (“C”).

Gosh! That is quite the register file. I was going to suggest using (and under-using) an SRAM for a register file, but this is well beyond that.

I got a whole tube of 74LS170s. I like OC rather than tristate simply because I have no chip select conflicts.

Unicorn Electronics has the 29705 16x4 dual-port RAM in stock for $8 each. 74172s are listed as limited stock on hand for $5 each.

Right now I am looking for SD card (standard size) breakout cards, but just seem to find the (micro) SD card adaptors.

The time frame of the design is the mid-1970s, so the older standard SD cards (16 Meg) are more than ample to emulate the fixed drives of the time.

Ben,

I guess you are in the US. Here in the UK there are not many companies that stock the old TTL devices. You find a few on eBay, but they are getting rare.

My proposed design has been heavily influenced and inspired by Sweet-16 - so it is an accumulator plus 15 other general purpose registers.

The ALU design came from Dieter Muller (6502.org) which has been used to great effect in the Gigatron by the late Marcel van Kervinck. I intend to add a universal shift register for left and right shifts, and a 74x85 magnitude comparator to provide the GT LT and EQ flags.
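
As a sketch of how the GT, LT and EQ flags could fall out of one comparator per 4-bit slice, the C model below chains four slices from the least significant nibble upwards. It is loosely modelled on the cascade inputs of a 74x85 and is not taken from the actual design. The type and function names are hypothetical, and the comparison is unsigned.

```c
#include <assert.h>
#include <stdint.h>

typedef enum { FLAG_LT, FLAG_EQ, FLAG_GT } Flag;

/* One 4-bit slice: if the nibbles differ, this slice decides;
   otherwise it passes on the cascade input from the less
   significant slice. */
static Flag compare_slice(unsigned a, unsigned b, Flag cascade_in)
{
    assert(a < 16 && b < 16);
    if (a > b) return FLAG_GT;
    if (a < b) return FLAG_LT;
    return cascade_in;
}

/* Four slices chained from least to most significant nibble. */
static Flag compare16(uint16_t a, uint16_t b)
{
    Flag f = FLAG_EQ; /* cascade input of the lowest slice tied to "equal" */
    for (int slice = 0; slice < 4; slice++) {
        unsigned na = (a >> (4 * slice)) & 0xFu;
        unsigned nb = (b >> (4 * slice)) & 0xFu;
        f = compare_slice(na, nb, f);
    }
    return f;
}
```

Because each more significant slice overrides the result from below whenever its own nibbles differ, the output of the top slice is the result for the whole 16-bit word.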

That makes the 4 bitslice ALU 7 chips and the registers 1 chip. The PC will be 1 chip per slice and the control unit 6 or 8 chips in total.

The machine has a 64K address space, but is page addressable to 16 Mbyte.
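
One way to reach 16 Mbyte from a 64K address space is an 8-bit page register placed above the 16-bit address, giving 256 pages of 64K. That split is an assumption made for this sketch, not a detail given above, and the names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed scheme: 8-bit page register + 16-bit address = 24 bits. */
#define PAGE_BITS   8u
#define OFFSET_BITS 16u
#define PHYS_LIMIT  (1ul << (PAGE_BITS + OFFSET_BITS)) /* 16 Mbyte */

/* Combine the page register and a 16-bit address into a 24-bit address. */
static uint32_t physical_address(uint8_t page, uint16_t offset)
{
    return ((uint32_t)page << OFFSET_BITS) | offset;
}

/* Recover the page number from a 24-bit physical address. */
static uint8_t page_of(uint32_t phys)
{
    assert(phys < PHYS_LIMIT);
    return (uint8_t)(phys >> OFFSET_BITS);
}
```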

I think I can do the whole machine in 70 or 80 TTL ICs.

Why 16 meg of memory? Mr Gates claims 640K is ample.

Bank-select CPUs work best with 4 banks: program, data, display and an extra bank.

Since I am not using graphics on these machines, I figure 128 KB is all I will ever use for the 20-bit machine: 96 KB program and 32 KB O/S, with a 1 KB disk block size.

For the larger machine (8/16/32 bits): 128K user and 64K O/S, with a 2 KB disk block size. I/O is 2400 baud serial plus a fictional hard drive of 203 tracks and 2 heads, with 2 or 4 KB per track (812 blocks), in a FAT-style format.
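
The 812-block figure works out if each track holds two blocks: 2 KB tracks with 1 KB blocks, or 4 KB tracks with 2 KB blocks. Here is a small C sketch of that arithmetic, plus a block-to-track mapping. The two-blocks-per-track reading and the ordering (fill a track, switch head, then step to the next track) are assumptions for illustration, as are all the names.

```c
#include <assert.h>
#include <stdint.h>

enum { TRACKS = 203, HEADS = 2 };

typedef struct {
    uint32_t track_bytes; /* 2048 or 4096 */
    uint32_t block_bytes; /* 1024 or 2048 */
} Geometry;

typedef struct {
    uint32_t track, head, index;
} BlockAddr;

static uint32_t blocks_per_track(Geometry g)
{
    assert(g.block_bytes != 0 && g.track_bytes % g.block_bytes == 0);
    return g.track_bytes / g.block_bytes;
}

static uint32_t total_blocks(Geometry g)
{
    return (uint32_t)(TRACKS * HEADS) * blocks_per_track(g);
}

/* Assumed ordering: fill a track, then switch head, then step track. */
static BlockAddr locate(Geometry g, uint32_t block)
{
    uint32_t per_track = blocks_per_track(g);
    BlockAddr a;
    assert(block < total_blocks(g));
    a.index = block % per_track;
    a.head  = (block / per_track) % HEADS;
    a.track = block / (per_track * HEADS);
    return a;
}
```

On the SD card, the emulated block would then sit at byte offset `block * block_bytes`, so the whole drive fits in well under 2 MB either way.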

Some BIOS and library routines are in ROM, but floating point still needs to be written.

The hardware has 2 SD cards, so I can copy stuff, as disk formats are not standard.

Ben?

Sorry - this reply is definitely wandering off-topic, and would probably be better off under a new title.

Ben,

Agreed, 16MB is a lot of memory by some standards, and to someone who first touched a CP/M machine in 1978 with initially only 16Kbytes it seems an astronomical amount.

However, in the last 42 years we have reached the point where cell phones are regularly fitted with 8GB internal RAM and a microSD card that will take a 128GB card.

But we have to accept that the way we interact with computing devices has changed a lot - from a single user on a terminal working with a CP/M microcomputer - to a teenage kid on a cell phone who wants to have multiple Apps simultaneously in memory, and spend his day flicking between them, sending badly spelt messages punctuated by cryptic emoticons. Not to mention the demands of multiple, multi-megapixel cameras and HD or 4K screens.

Clearly the demands on memory and processor resources have mushroomed by many orders of magnitude, in terms of memory address space, processor transistor count and clock frequency.

I have been laying out pcbs since my college days, my first introduction was on a BBC micro in 1985. That was something of a challenge and it taxed the full resources of that early machine. Five years later we had access to 16 or 25MHz Compaq 80386 machines with perhaps 10MB of RAM and a 40MB hard drive.

That dramatically improved the whole business of laying out multilayer boards - although schematic capture and layout had to be done in separate applications, and communicate with each other using Engineering Change Order files (ECO) that allowed forward and back annotation to be performed and recorded.

Even 30 years ago - I remember this process to be quite productive, and in my opinion pcb design software has not seen the equivalent advances, that other engineering design packages have witnessed.

It is still very much a 2D manual process, and whilst screen refresh and resolutions have increased - it is still very much recognisable as the original 2D drawing engine software with a few somewhat irrelevant new features bolted on. CAD companies have to find something new to add on an annual basis to keep the subscription revenues coming in. In truth there really has not been much innovation in 40 years - somewhat disappointing when you look at the tens of gigabytes these CAD packages take up on the disk.

However, the symbol libraries have grown in size. You seldom have to design your own symbol or component footprint. However - I have twice been bitten by downloading 3rd party symbols that have been complete works of fiction.

To return to the original question about memory. I think 16MB, and a fairly fast 16 or 32 bit processor - say 100MHz clock, would fulfil most of my professional requirements, such as PCB design, document editing, spreadsheet etc. Provided there was only a single application in memory at any time, performance would be acceptable.

I despair at my modern laptop that frequently struggles to perform - as a result of running background tasks that are entirely beyond my control.

A complete rethink about operating systems and how we interact with the machine might be in order. The biggest overhead to efficient machine interaction has to be the constant barrage of interruptions from the web, internet browsing and emails from co-workers. All things that were not widely available or commonplace in most companies back in 1990.

Project Oberon is just what you are looking for

The other windows operating system

I am not sure of the state of the hardware, but $100 for a basic CPU feels right.

As for PCB design, I think a text-cell-based setup is the way to go, even for analog. A 14-pin DIP can fit 1 or 2 traces between pins, so why have floating-point data for stuff when a simple grid will work for most things? I remember seeing a demo of a text display that could show all sorts of symbols and PCB text characters (traces and pads). I have always liked the idea of a text display with overlapping transparent text windows: layer 1 window text, layer 2 text, layer 3 graphics.

Ben.

PS: If this was an email, not a pop-up box, I could check my spelling and line endings. If I want portable calling, I’ll just acquire a Shoe Phone (“Get Smart”, 1965-1970).

This is actually why I’m interested in Spacewar! (the first known digital video game). Until then, there had been a certain tradition of visual computing at MIT, which was also diffusing to Bell Labs (not least through the use of DEC equipment), and which centred on an intimate dialog between the user and the machine. Watching any of the demo films of the era (e.g., the demo of Sketchpad or “The Incredible Machine”), there’s inevitably a comment like, “The operator is talking to the computer” along with visuals of highly concentrated, focused users. Spacewar! was somewhat heretical in this tradition, as it moved the focus from human-machine interaction to human-to-human interaction in realtime, on a virtual meeting ground provided by the machine. The machine was now transparent (just implementing the mechanics of a fictional world and facilitating human interaction) and opaque (by the physics of gravity, visually represented by a flickering sun in the center) at the same time. This was only possible because the game’s parameters were fine-tuned to both “quick motor reactions” and “tactical” considerations, as pointed out by Steve Russell (the main contributor to Spacewar!, also known for the first implementation of Lisp) in Stewart Brand’s “II Cybernetic Frontiers” (the slightly extended book version of his “Computer Bums” Rolling Stone piece).

I think it’s not just a coincidence that Stewart Brand paralleled Spacewar! and the development of the GUI and Smalltalk at PARC in this narrative. Rather, while not explicitly mentioned in the article or book, it suggests a common strain. This may be found in the peculiar combination of habitual “quick motor reactions” and the broader, goal-oriented “tactical thinking”, as a provision for the apparently stateless GUI, which must allow for flow of work and interruptions (dialogs for choice and confirmation, etc.) at the same time.

From this perspective, the problem is rooted in the very “DNA” of visual, interactive computing, as it embraces interruptions and allows them to integrate with what would otherwise be a continuous, focused flow. This could be called “the principle of loose focus”. If this isn’t what we want or deem healthy, we may have to revisit this other tradition of visual computing (which was eventually phased out by timesharing), as demonstrated by MIT and Bell Labs in the late 1950s and throughout the 1960s.

Watching a demo film on computers, I found the early GUI was better than today’s: the input and the display were two different items. Input was written rather than typed, a novel thing.

I suspect the line-drawing hardware was better too, in the sense that a better display gave better detail but the screen size stayed the same. With bit-mapped graphics, more detail changes the screen size, a real pain.

To me, the PDP-8 doesn’t feel like a great fit for Forth since the machine has no good support for stacks. I tried to use the auto increment addressing mode for popping, but that still leaves awkward push operations.

I implemented a subroutine threaded Forth using JMS. Wait, what? That doesn’t support reentrant words, does it? No it doesn’t, but surprisingly most Forth code does very well without it.
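
To show why JMS-based threading is not reentrant, here is a minimal C model of the linkage: JMS stores the return address in the first word of the subroutine and continues at the following word, and the return is an indirect jump through that word. The helper names and the addresses used with them are hypothetical, and page addressing is ignored.

```c
#include <assert.h>
#include <stdint.h>

#define MEM_WORDS 4096
static uint16_t mem[MEM_WORDS]; /* 12-bit words held in 16-bit cells */

/* JMS target: save the return address in the subroutine's first word
   and return the new PC, which is the word after it. */
static uint16_t jms(uint16_t return_addr, uint16_t target)
{
    assert(target < MEM_WORDS - 1);
    mem[target] = return_addr & 07777;
    return (uint16_t)(target + 1);
}

/* JMP I target: return through the saved word. */
static uint16_t ret(uint16_t target)
{
    assert(target < MEM_WORDS);
    return mem[target];
}
```

If a word at 0400 is called from 0200 and then calls itself again before returning, the second `jms` overwrites the word at 0400, so the first return address (0201) is lost. Words that never recurse are unaffected, which fits the observation that most Forth code does fine without reentrancy.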

There is a Forth for the PDP8:

Very nice! I love to see modern development for these old systems!