I have been working my way through the Virtual VCF West 2020 videos, and was intrigued by the one showing the processes required to operate a PDP-8.

I came away from the video somewhat glad that I had avoided the minicomputer era, and all the encumbrances of paper tape and 110 baud ASR 33 Teletypes. Whilst the early PDP-8 machines had a cycle time of 1.5 µs, so that a typical two-cycle memory-reference instruction took 3 µs, this was clearly not the bottleneck to efficient development on this machine.

The ASR 33 Teletype and its paper tape reader and punch ran at about 10 characters a second. That is fine for typing and printed output, but geologically slow when it came to loading the machine from tape, or repunching a tape after editing some of the code.
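To put a rough number on "geologically slow", here is a back-of-envelope estimate. It assumes the common BIN paper tape format, in which each 12-bit word is punched as two 6-bit frames, and it ignores leader, origin and checksum frames. On those assumptions, loading a full 4K-word memory image at 10 characters a second takes:

$$
t \approx \frac{4096\ \text{words} \times 2\ \text{frames/word}}{10\ \text{frames/s}} = 819.2\ \text{s} \approx 13.7\ \text{minutes}
$$

Repunching the same image on the ASR 33 punch costs roughly the same again.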

So I speculated: what would the PDP-8 computing experience have been like if a Forth had been available for it? It belongs to the right era, the mid-1960s to mid-1970s, when Charles Moore was developing his Forth to run on various minicomputers.

A tiny Forth can be written in about 2K words of assembly language or fewer, which on a basic 4K-word PDP-8 would leave a couple of Kwords for user programs. So I did a Google search for PDP-8 Forth, and it appears to have been almost completely ignored, apart from a GitHub repository from Lars Brinkhoff, who looked at it almost exactly 7 years ago.

His approach was to write a target-specific nucleus in the native machine language of the CPU, containing all the necessary primitives, and then have a target-independent kernel that allows the remainder of the Forth to be compiled.

This approach has been used by other notables in the Forth community, such as C.H. Ting with his eForth.

If the PDP-8 was a best-selling computer, with some 50,000+ sold, why was it not a popular target for Forth?

My view is that it was in the wrong place at the wrong time. It was a budget machine aimed at education and small engineering or office roles. Its 16-bit successor, the PDP-11, was more likely to be used in the scientific roles (such as radio astronomy) where Forth first gained traction.

Additionally, until about 1975 few people outside the radio astronomy community knew anything about Forth, and it wasn't until August 1980, when Byte Magazine ran its Forth Special Supplement, that Forth was publicised to the wider hobbyist community.

By 1980, most hobbyists were moving away from 15-year-old minis and ASR 33s, and wanted far more compact microprocessor hardware with cassette storage or even a floppy drive.

The PDP-8 also had a Fortran compiler and a BASIC-like language called FOCAL. Perhaps these were deemed sufficiently flexible for the PDP-8s used in academia and education.

So I genuinely believe that the poor old PDP-8 was going to miss the boat and remain a Forth spinster for the rest of its life.

But were there other reasons why the PDP-8 architecture was not a good fit for Forth? Possibly the subroutine mechanism, which made recursion very difficult, or the 12-bit word size, which was not a good fit for the usual 16-bit Forth VM model?

The PDP-8 was probably one of the last “octal” programmed minicomputers, and its instruction set reflected this: again not a good fit for Forth, which was embracing hex notation and the ASCII character set.

I looked for a PDP-8 simulator written in C, and found one by Jean-Claude Wippler of JeeLabs:

https://git.jeelabs.org/jcw/embello/src/branch/master/explore/1638-pdp8/p8.c

The simulator is about 250 lines of C code, which seems a lot more than I would have expected for such a simple machine. I have written simulators in about 60 lines of code, and the OPC project had several CPUs that were simulated in a similar number of lines. Much of this code relates to loading memory from a file and to input and output. The instruction set itself is handled by four fairly compact switch-case structures.

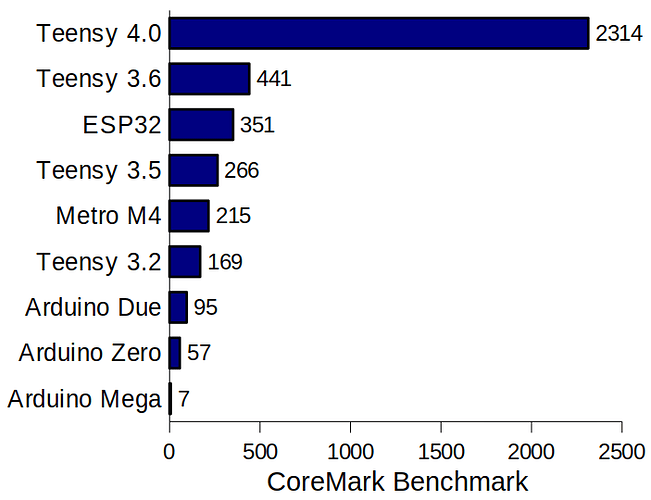

So I feel a new project on the horizon: a compact PDP-8 simulator running on a Teensy, and a Forth-like interpreter to go with it. That should help keep me busy on these winter nights.