I have always liked x + 1 → x better. Any more symbols than that and my head explodes, like a /\ b.

I actually have no such story, which is a story of its own: my school had a voluntary class on “electronic data processing”, but it fell in a peculiar time, when home computers were beginning to replace programmable calculators for the purpose. So, while awaiting the arrival of a real computer, we skipped the electronic calculators. (I recall the VIC-20 being presented in class just before Christmas, I think. The Apple II was subject to Reagan-era export restrictions, since Austria was/is a neutral country; Tandy didn’t sell here; and PETs weren’t much of a thing, I think, since you had to import them from Germany – so none of the “trinity” for me.) However, the arrival of that computer (which turned out to be a domestic Philips product troubled by static electricity, more often calling for service than operating as intended) was delayed, and the class must have been among the last to learn computing on paper. We got the textbook in advance, and I stormed ahead and was, probably for aesthetic reasons, caught by the Algol section, with Algol becoming my first language – one I never ran on a real computer. To a certain extent, this division between software logic and the exoticism of the hardware it runs on has stayed with me.

In terms of hardware, the school had a huge, board-sized logic trainer (with light bulbs) and also a lone ASR-like teletype keyboard mechanism. I thought the latter must have been the most exotic thing I’d ever seen.

(The real trouble with that machine was that, when it failed, it locked permanently into a “call service” mode, with this as the sole startup message, which could only be unlocked by said service. Eventually it was found that this must be related to static interference, and an antistatic mat was procured to counteract it. However, the class was taught by a physics teacher who, as a proud sign of his occupation, always – as in always – wore a nylon lab coat, which emanated an electrostatic field that began to affect the machine at a distance of about one and a half meters – and thus easily defeated the antistatic mat. In hindsight, we should have placed the teacher on that mat. Because of this, we got about three short sessions out of that computer in two years.)

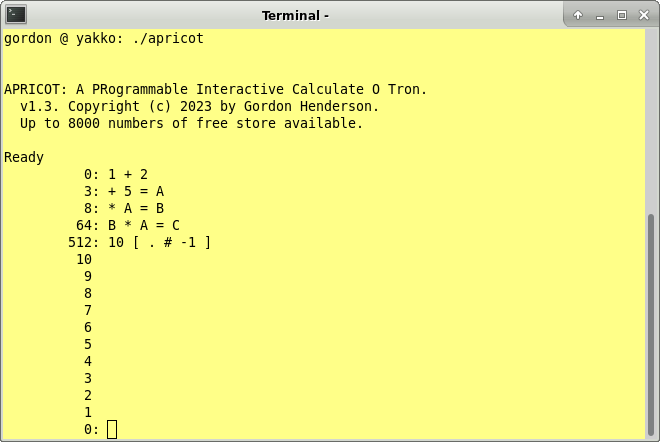

Sounds like you want … APRICOT…

(Things to the right of the colon are what I typed; stuff to the left is what the computer typed and remembered for me.)


-Gordon

Haha, I totally recognize that. At least in BASIC it used to make more sense since you would write

LET x = x + 1

… and that makes it somewhat clear this is an assignment operation.

Not sure if that expression graduated into what we use today as a shorthand, but indeed, algebra-wise, it looks quite weird.

My first contact with a real computer was with a teletype (probably also an ASR-33) and tic-tac-toe. My father worked at a GE plant, and they had an annual open house event. This was probably 1974 or so (when I was 12), and one exhibit was the teletype connected to a remote GE computer playing tic-tac-toe against the visitors. When it was my turn, I was given the first move and selected the middle square. The computer played the one to its left. I could hardly believe it – it had lost on its very first move! Who had programmed that thing? Was it on purpose? Anyway, I played to the end and won as expected, completely shocking the crowd.

Around the same time I had been impressed with the design of the cameras used in the Viking probes that would land on Mars a couple of years later. They used a pair of mirrors to scan the scene with a single photocell. I thought of using the same idea for a robot, but how to convert the output of the light sensor into a digital signal? I came up with the idea of injecting the photocell’s output into a carbon rod and since I didn’t know about voltages back then I imagined the current would become weaker and weaker as it went along the rod. I would have a motor move along the rod until the current was too weak to keep it moving and at that point a magnetic head would scan a strip with the binary value. There would be a different strip at each possible position (like the tracks on a magnetic drum). Then the motor would be pulled down to the start of the rod so the next pixel could be scanned. At 10 seconds per pixel it would take 9 days per frame to scan at a 320x240 resolution.

I don’t have a specific story, just a general impression that (big) computers are smart.

But it reminds me of my post “On error goto”.

A couple of first contact types of anecdotes from my extended family:

- An aunt, who wasn’t technical at all and must have read some popular presentation, propounded the idea, perhaps in the early 1970s, that computers could solve problems very much faster than people, but took ages to program. The remark bothered me – why make such things in that case? (I think I’ve seen a press clipping about the ENIAC or similar which said very much this kind of thing.)
- A nephew, perhaps around the turn of the millennium, evidently held that common misconception about desktop machines of the PC era: that the monitor was the computer, and the big grey box on the floor was somehow related but not the thing itself.

My first contact was during an ‘O’ Level physics class in either 1978 or '79. Our physics teacher brought in a rectangular wooden box with a perspex top; the perspex had a square cut out of it so you could access the keypad of the KIM1 contained within. Memory and embellishment lead me to want to believe that the box had been lovingly crafted with dovetail joints, although that is unlikely. I was impressed by this device, but I don’t recall that we did anything useful with it.

The school was (still is) part of a larger college, leisure and community centre complex in North Manchester, and the college had a couple of rooms full of various models of Commodore PET, some of which also had disk drives. We were eventually given access to these and used them for very simple programming. There was also an Apple ][, but you could only touch that if you’d demonstrated some level of skill, and I was probably too overawed by the hardware to develop any real software skill at that time!

Some time ago I was looking for information on the setup at my old school and found mention of my physics teacher, Mr E Purcell, in a copy of the 1982 Commodore Club News. He is listed as running Educational Workshops from the school. I put 2 and 2 together, possibly making an erroneous number, and believe that he probably assembled and built the KIM1 that we were introduced to.

Link to 6502 Org and the archive of Commodore Club News.

I believe this was the intuitive understanding when it came to hitting the computer, either as an admonishing tap or in real anger. Which poses the question: how do you hit Azure in the age of flat panels? Computing has become so unrelatable, since…

My dad taught 8th grade science and one day he discovered that the PTA had bought a computer for the school. The school didn’t know what to do with it, so they had stored it away and my dad found it while doing inventory.

It was a TRS-80 Model I Level I with 8K of RAM. He got the school to pay to upgrade the system to a Level II and 16K.

That summer, he took it home to get some experience using it and that’s when I found it. So that would have been 1979.

No Google. No Stack Overflow. Just the manuals, some type-it-in books and time. But by the time I graduated from High School, I had already “discovered” structured programming (à la Dijkstra).

My problem was how a single transistor acted as an inverter. If you applied ‘1’ to its input, it was on, therefore at logic ‘1’, so it wasn’t inverting?

It’s only when I considered the volts on input and output it suddenly dawned on me. With 0V applied, the output was ‘1’ - because the output would float high! With a voltage on input, the output would drop to 0V as the transistor pulled the output down. Hence an inverter!
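A minimal Python sketch of that reasoning, treating the transistor as an ideal switch. The 5 V supply, the 0.7 V turn-on threshold and the load resistor tying the output to the supply are illustrative assumptions (the usual one-transistor inverter), not details from the post; a real transistor in saturation leaves a few tenths of a volt rather than exactly 0 V:

```python
VCC = 5.0   # assumed supply voltage (illustrative)
V_ON = 0.7  # assumed base voltage at which a silicon NPN starts conducting

def inverter_out(v_in: float) -> float:
    """Ideal-switch model of a one-transistor inverter: a load resistor ties the
    output to VCC, and the transistor, when on, connects the output to 0 V."""
    if v_in >= V_ON:
        return 0.0  # transistor on: output pulled down to (roughly) 0 V
    return VCC      # transistor off: nothing pulls down, so the resistor pulls it up

def logic_level(v: float) -> int:
    """Read a voltage as a logic level, using half the supply as the threshold."""
    return 1 if v > VCC / 2 else 0

for v_in in (0.0, VCC):
    v_out = inverter_out(v_in)
    print(f"in {v_in:.1f} V (logic {logic_level(v_in)}) -> out {v_out:.1f} V (logic {logic_level(v_out)})")
```

Run as-is, it shows an input at logic 0 giving logic 1 out, and an input at logic 1 giving logic 0 out: the inversion only becomes obvious once you look at voltages rather than labels.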

My very first taste of computers (apart from a visit to the University of Delaware with my 8th-grade math club to see a DEC PDP-8 (a “straight 8”) in the flesh – not that I had any idea back then of what the heck it was I was seeing ;-> ) was in the spring of 1969, at my high school in northern Delaware. It had taken advantage of a Federal grant to equip a room with some teletype machines and acoustic couplers.

The teletypes provided access to “Conversational Fortran. . .” followed by a Roman numeral. Long afterward, after years as a computer programmer, I’d edited this in memory to make the Roman numeral “IV” – because “Fortran IV” is a thing, right?

It was only within the past decade, in the era of the Web, that I was able to cobble together evidence (in addition to the sketchy information in my high-school yearbook) that caused me to conclude that the system on the other end of that teletype in the spring of '69 was no less than a Univac 1108 at Computer Sciences Corporation, who were testing a new timesharing network (“Infonet”) at the time. And the Univac had a “Conversational Fortran V”. Presumably the operating system was CSC’s own modified version of Exec II (CSCX) rather than Exec 8, but I believe the Fortran V language processor would have been the same in either case.

That taste of high-end timesharing was a luxurious experience, never to be repeated. The following school year, those same teletype machines were connected to an overloaded IBM 1130 at the University of Delaware running BASIC. My fooling around with the Fortran system had been strictly extracurricular, but I had the misfortune of signing up for the official BASIC course that fall, and had to do actual course assignments on the hideously slow system. Talk about bait and switch! ;->

A paper about such computer-education projects by a scholar at the University of Delaware comments: “[D]uring the summer of 1969 and the following school year. . . [t]he time-sharing service was provided by an IBM 1130 computer housed at the University of Delaware and funded jointly by EDTECH and DSAA. . . After the sophistication of the equipment utilized the previous year, the three EDTECH high schools were generally dissatisfied with the service. . .”

Or, as a Usenet commenter once noted about a similar service, “I remember using a multiuser BASIC interpreter that was running remotely on an IBM 1130 at Central High School in Philadelpha in the late 1960’s. It was godawful slow…”

It sure was! It was an agonizing chore to get assignments completed.

The 1130 multiuser BASIC that was inflicted on high-school students may well be the one mentioned at 1130.org. It’s listed there as presumed lost.

That experience was not unlike my first trip on an airplane. The flight took place in the early spring of 1976, and I was travelling to Key West from Delaware (via Philadelphia airport) to meet a friend for a holiday. Due to the quirks of airline scheduling, I was booked on an almost-empty L1011 widebody. I had the whole row of seats to myself! “I could get used to this!” I thought. Well, it’s never ever happened again. The flight back home was like being crammed onto a crowded bus.

;->

I had something similar at school, in the subject “Computer Studies”, which was a new vocational course: a CSE course, less academic than the usual O level courses. It was new to the teacher, who was also new to teaching, I think. Anyway, I asked how an inverting logic gate could produce a high signal if all the inputs were low. The teacher had a quick think and said that it was probably down to the use of capacitors, as they store charge (or voltage). I remained unconvinced, and not long after realised that it was because logic gates are supplied with power – not shown on logic diagrams, of course.

My first encounter with a computer was when I was about 7 years old, in 1977. My mom and I went to visit her sister in Boston. I don’t know if her sister had a boyfriend, or if he was just someone she knew, but this guy I met on our trip introduced me to a Heathkit computer he’d built. He loaded up a “turtle graphics” program on it, which, looking back on it, worked in character mode. The “turtle” was an inverse square that I could move around the screen with arrow keys on the keyboard, and I could press another key to toggle the “pen down” to draw, or “pull the pen up” to not draw. I was entranced! I don’t know how long I was going at it, but it must’ve been an hour. I didn’t want to stop, but of course we had to leave.

On that same trip, we visited the Boston Children’s Museum, and they had what I think was a DEC VAX exhibit (at least some terminals hooked up to one). It gave each kid 5 minutes to play a game. You could pick from a selection of games, as I remember. They were all in text mode. I remember playing hangman on it. The exhibit was swamped; so many kids wanted to play on it. I went through the line over and over again.

I didn’t think about how it worked. I just loved it.

I didn’t get to use a computer again until I was 11. We stopped by our local library, and I saw this middle-aged man doing something on an Atari 400 computer. It wasn’t like what I’d seen before. Something seemed wrong, or broken. I kept seeing him flip between a blue screen with text on it and a low-res graphics screen that looked like a race track, with what looked like animal figures sprayed across it in lines. I sat down at a table a fair distance from him, just watching him. I assumed he was an employee of the library, and only people like him could use it. I started getting the idea that he was doing something to make the computer put up this graphics display. It seemed like he was trying to make a horse racing game, but it kept messing up. I thought, wow, I’m seeing this guy make something on the computer. He didn’t seem to be successful in making his horses race, though. I saw him go through the cycle of going back to the text, and then seeing the graphics do exactly the same thing.

I think what would happen was he’d line up a bunch of “horses” on one side of the screen, something would happen, and then all of a sudden, copies of the horse figures were plastered across the screen in lines. It was like he was trying to animate, but didn’t understand you had to do something to slow down the action, and that you had to erase where the horses had been.
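For the curious, a hypothetical Python sketch of the two things that horse race seemed to be missing: erasing each figure's old position before drawing the new one, and a delay between frames. The screen size, the "H" glyph and the function name are made up for illustration; it only mimics a character screen whose memory keeps whatever was last drawn there, not the actual Atari BASIC program:

```python
import time

WIDTH, LANES = 16, 3  # hypothetical screen size: 16 columns, 3 lanes

def race(speeds: list[int], frames: int, erase: bool = True, delay: float = 0.0) -> list[str]:
    """Move one 'H' per lane across a screen buffer that, like screen memory,
    keeps whatever was drawn until something overwrites it."""
    screen = [[" "] * WIDTH for _ in range(LANES)]
    positions = [0] * LANES
    for _ in range(frames):
        for lane in range(LANES):
            if erase:
                # Blank the old position first; skipping this leaves a trail of copies
                screen[lane][positions[lane]] = " "
            positions[lane] = min(positions[lane] + speeds[lane], WIDTH - 1)
            screen[lane][positions[lane]] = "H"
        # Pause between frames so the motion is visible (0 s by default)
        time.sleep(delay)
    return ["".join(row) for row in screen]

for row in race([1, 2, 3], 4, erase=False):
    print(row)  # every position each horse passed through stays drawn
```

With `erase=False` you get rows of copies plastered across the track, much like the screen described above; with the default `erase=True` each lane ends up holding a single "H".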

Anyway, I was really interested in the fact that this guy was in the process of creating something on this computer. I had never seen that before. I mean, I kinda knew from TV shows that computers were programmed by smart people to do stuff, but I never thought I’d see it happen in front of me.

My mom noticed my interest, and asked me about it. I said I was watching this guy use that computer. She asked if I wanted to use it. I said, “They won’t let me.” I was just sure they wouldn’t let kids on it. I had never seen kids use a computer, other than that visit I made to the Boston Children’s Museum. She insisted I ask the librarian about it. I thought for sure they’d say no, but I asked anyway. I was surprised. The librarian said I could use it if I was 10 years of age or older, and I took a 15-minute orientation. I said okay, I’ll do it! So, I went to an orientation with some other people. We learned about how to operate the Atari, how to load software, how to check out software from behind the desk, and some basics for interacting with the computer. I asked about what that guy I was watching was doing. I was informed that he was programming in a language called Basic. They had the Basic cartridge behind the desk. They also had a tutorial series on learning the language. That was one of the first things I went for, but it was tough. I didn’t find the tutorial that helpful. I ended up reading the manual, which was better. I spent months learning how to program well in Basic, often getting help from people who happened to be hanging around the computer. That’s what got me started.

It was gratifying listening to Ted Kahn’s interview on the Antic podcast, back in 2016.

He talked about how he was part of a group at Atari that was promoting their computers through donations to non-profits. He mentioned they donated to schools, museums, etc. I remembered that the Atari 400 at the library had a badge on it saying it was donated by some foundation, or institute. I know it wasn’t the Atari Institute for Educational Action Research. It had a different name. I can’t remember it now, but I couldn’t help thinking that his work probably had a hand in my getting my hands on a computer I could program (though, once I found out they had an Atari 800 in a different part of the library, with a disk drive, I switched to using that. I was glad to get away from the 400’s membrane keyboard, and slow tape drive!)


In a way, X = X + 1 was misleading. I sometimes hear mathematicians say that this doesn’t make any sense. However, what’s implied is a time dimension. What you’re really saying is X(t+1) = X(t) + 1, where t is your time step: X at the next time step equals the value of X now plus 1.
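A small Python sketch of the same idea, assuming nothing beyond the post: the ordinary assignment rebinds x and forgets the old value, while keeping a list indexed by t makes X(t+1) = X(t) + 1 literally true at every step. The names `step` and `history` are just illustrative:

```python
def step(x: int) -> int:
    """One time step of x = x + 1: given x(t), return x(t+1)."""
    return x + 1

# Imperative reading: the name x is rebound, and the old value is simply gone.
x = 0
x = step(x)  # same effect as x = x + 1

# Time-indexed reading: keep every x(t), so x(t+1) == x(t) + 1 holds literally.
history = [0]  # history[t] is x(t)
for t in range(3):
    history.append(step(history[t]))

print(x, history)
```

This prints `1 [0, 1, 2, 3]`: the first form only ever shows "X now", the second keeps the whole timeline the equation talks about.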

The first BASIC program I ever wrote was typed in and executed on my behalf by my 8th grade teacher’s husband, Olin Campbell, who was a graduate student of Prof. Patrick Suppes at Stanford’s IMSSS. Olin sent back a hardcopy of his TOPS-10 terminal session, which of course started with him logging in. He abbreviated the initial command to LOG OLIN. Knowing nada about OSs and security, I was convinced that this incantation -must- have something to do with logarithms.

I find X ← X + 1 quite compelling; it’s also the notation used to describe changes of state in older CPU documentation. This use in assignments is also why, in ASCII 1963, the left arrow sat where the underscore is now. (The up arrow, now the caret, was used for calls, I believe.)

Which brings us roughly back on topic, as these are two beloved symbols on Commodore keyboards (causing much wonder as to why these symbols would have been there).

Which is also sort of a first-contact story: when I was a kid, handling the account book at the local bank was a multistep, mostly electro-mechanical procedure (at the front end). As the final step, the account book would be mounted into a special printer (which has a name of its own in German, Sparbuchdrucker, i.e. passbook printer) with chain-printer-like type. And, as a kid, I was really fascinated by this print, especially by such magical characters as the square lozenge (◊, U+25CA, subtotal). Also a character now long out of common use.

When I first learned Basic, the LET command seemed redundant, because I thought, “What’s the difference between saying LET and just using the variable?” Well, it turns out there was a use for it. Basic didn’t allow you to use a variable name that resembled a reserved word (a variable like PRINT, or PRIN), unless you preceded your use of such a variable with LET. You could say something like LET PRINT=1, and Basic would allow it.

I watched a few videos on electronics several years ago, and the guy showed how if you connected transistors in certain configurations, you could get some interesting outputs. I thought, “Hey, these sound like logic gates, NOT, AND, etc.” I later learned the term “TTL logic.”

I knew from many years ago that modern computers used transistors, but it wasn’t until this that I got the idea that constructing logic gates with transistors isn’t that hard.


Or indeed (for some value of “hard”), constructing them out of computer-game elements:

https://retrocomputingforum.com/t/unintended-use-case/3501

It’s impressive that that guy actually went ahead and implemented the RV32I instruction set (the RISC-V 32-bit integer-only base instruction subset). That in turn allowed a friend of his to program John Conway’s “Game of Life” cellular automaton (though, granted, it runs so slowly that it has to be sped up in the YouTube video).
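For anyone who hasn't met it, the rule that friend had to implement is tiny. A minimal Python sketch of one generation of Conway's Life (a dead cell is born with exactly 3 live neighbours, a live cell survives with 2 or 3), storing live cells as a set of coordinates on an unbounded grid; this is just the textbook rule, not the code from the video:

```python
from collections import Counter

def life_step(live: set[tuple[int, int]]) -> set[tuple[int, int]]:
    """Return the next generation of Conway's Life for a set of live cells."""
    # Count live neighbours for every cell adjacent to at least one live cell
    counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # Birth on exactly 3 neighbours; survival on 2 or 3 (cells with 0 never appear, so they die)
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}

blinker = {(0, 1), (1, 1), (2, 1)}  # a horizontal row of three cells
print(sorted(life_step(blinker)))   # the row flips to a vertical column
```

The "blinker" alternates between horizontal and vertical every generation, a common first sanity check for any Life implementation, however exotic the hardware underneath.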

Coincidentally, I’ve just been re-reading 30-year-old sci-fi from Australian author Greg Egan. I’m not sure I fully appreciated this when I first encountered it in the 90s (before I’d ever even used Virtual PC or VMware or VirtualBox, or knew about SimH or Hercules, etc., etc.), but I’m certainly getting a kick out of my re-reads:

“So the processor clusters in Tokyo or Dallas or Seoul were simulating a cellular automaton containing a lattice of bizarre immaterial computers… which in turn were simulating the logic (if not the physics) of the processor clusters themselves. From there on up, everything happened in exactly the same way as it did on a real machine – only much more slowly.”

– Greg Egan, Permutation City (1994), Chapter 19 (REMIT NOT PAUCITY), June 2051

In that chapter, the “Garden of Eden” configuration for the launch of “Elysium” (the “TVC universe”), being run in simulation on the “Joint Supercomputer Network”, also contains the uploaded personalities (“Copies”) of 18 people (one actually “running” and the others in storage); the code for a second cellular automaton called the “Autoverse” (together with Maria Deluca’s Autoverse specs for “Planet Lambert”, along with her design for a simulated microorganism intended to be the seed for the evolution of life there); plus Malcolm Carter’s code for the virtual-reality environment “Permutation City”; together with two stowaway Copies who have been steganographically concealed within the code for the city.

The $32 million from the 16 billionaire “Copies” to whom Paul Durham has promised immortality is enough to run the launch for about nine hours (depending on the fluctuating price of computation on the “QIPS Exchange”). After the simulation is shut down, according to Durham’s “dust theory”, the TVC universe will continue to exist (and continue to grow) orthogonally to the universe from which it was spawned, organizing itself out of “particles” of space-time that Durham calls “dust”. Only the Copies of Paul and Maria inside Elysium itself will ever get to know if the launch was successful, or if Durham’s “dust theory” is true.

Original Maria never believes it – she’s just a freelance programmer being paid to do a job, and she thinks her employer is a lunatic.

More Permutation City:

++++

"‘There’s a cellular automaton called TVC. After Turing, von Neumann and Chiang. Chiang completed it around twenty-ten; it’s a souped-up, more elegant version of von Neumann’s work from the nineteen fifties.’

Maria nodded uncertainly; she’d heard of all this, but it wasn’t her field.

She did know that John von Neumann and his students had developed a two-dimensional cellular automaton, a simple universe in which you could embed an elaborate pattern of cells – a rather Lego-like “machine” – which acted as both a universal constructor and a universal computer. Given the right program – a string of cells to be interpreted as coded instructions rather than part of the machine – it could carry out any computation, and build anything at all. Including another copy of itself – which could build another copy, and so on. Little self-replicating toy computers could blossom into existence without end. . .

‘In two dimensions, the original von Neumann machine had to reach farther and farther – and wait longer and longer – for each successive bit of data. In a six-dimensional TVC automaton, you can have a three-dimensional grid of computers, which keeps on growing indefinitely – each with its own three-dimensional memory, which can also grow without bound. . . . The TVC universe is one big, ever-expanding processor cluster.’ . . .

Maria could almost see it: a vast lattice of computers, a seed of order in a sea of random noise, extending itself from moment to moment by sheer force of internal logic, ‘accreting’ the necessary building blocks from the chaos of non-space-time by the very act of defining space and time. Visualizing wasn’t believing, though. . .

She said, ‘So you promised to fit a snapshot of each of your “backers” into the Garden-of-Eden configuration, plus the software to run them on the TVC?’

Durham said proudly, ‘All that and more. The major world libraries; not quite the full holdings, but tens of millions of files – text, audio, visual, interactive – on every conceivable subject. Databases too numerous to list – including all the mapped genomes. Software: expert systems, knowledge miners, metaprogrammers. Thousands of off-the-shelf VR environments: deserts, jungles, coral reefs, Mars and the moon. And I’ve commissioned Malcolm Carter, no less, to create a major city to act as a central meeting place: Permutation City, capital of the TVC universe.

‘And, of course, there’ll be your contribution: the seed for an alien world. . .’

Maria’s skin crawled. Durham’s logic was impeccable; an endlessly expanding TVC universe, with new computing power being manufactured out of nothing in all directions, ‘would’ eventually be big enough to run an Autoverse planet – or even a whole planetary system. The packed version of Planet Lambert – the compressed description, with its topographic summaries in place of actual mountains and rivers – would easily fit into the memory of a real-world computer. Then Durham’s Copy could simply wait for the TVC grid to be big enough – or pause himself, to avoid waiting – and have the whole thing unfold. . .

Maria arrived at the north Sydney flat around half past twelve. Two terminals were set up side by side on Durham’s kitchen table. . .

Following a long cellular automaton tradition, the program which would bootstrap the TVC universe into existence was called FIAT. Durham hit a key, and a starburst icon appeared on both of their screens.

He turned to Maria. ‘You do the honors.’ . . .

She prodded the icon; it exploded like a cheap flashy firework, leaving a pincushion of red and green trails glowing on the screen.

‘Very tacky.’

Durham grinned. ‘I thought you’d like it.’

The decorative flourish faded, and a shimmering blue-white cube appeared: a representation of the TVC universe. The Garden-of-Eden state had contained a billion ready-made processors, a thousand along each edge of the cube – but that precise census was already out of date. Maria could just make out the individual machines, like tiny crystals; each speck comprised sixty million automaton cells – not counting the memory array, which stretched into the three extra dimensions, hidden in this view. The data preloaded into most of the processors was measured in terabytes: scan files, libraries, databases; the seed for Planet Lambert – and its sun, and its three barren sibling planets. Everything had been assembled, if not on one physical computer – the TVC automaton was probably spread over fifteen or twenty processor clusters – at least as one logical whole. One pattern. . .

She just couldn’t bear the thought that he harbored the faintest hope that he’d persuaded her to take the ‘dust hypothesis’ seriously. . .

At three minutes past ten, the money ran out – all but enough to pay for the final tidying-up. The TVC automaton was shut down between clock ticks; the processors and memory which had been allocated to the massive simulation were freed for other users – the memory, as always, wiped to uniform zeroes first for the sake of security. The whole elaborate structure was dissolved in a matter of nanoseconds. . .

The individual components of the Garden of Eden were still held in mass storage. Maria deleted her scan file, carefully checking the audit records to be sure that the data hadn’t been read more often than it should have been. The numbers checked out; that was no guarantee, but it was reassuring.

Durham deleted everything else. . ."

++++

;->