According to “Programming the PET/CBM” by Raeto West, Apple II BASIC is mostly identical in its arithmetic parts. (This is the book where everything I can’t find anything about, and then go to find out on my own, eventually turns out to have been written already, even if I failed to identify it in the first place.)

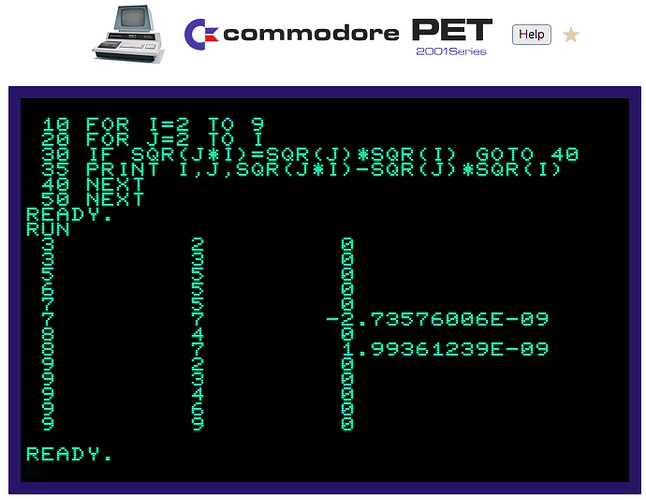

Regarding whether this ever got fixed, it’s the same with Commodore BASIC 4.0 (the one that the VIC and C64 never got).

The next big iteration was BASIC 7.0, found on the C128 in C128 mode, which in turn is based on BASIC 3.5, found on the C16/116 and the Plus/4. (BASIC 3.5, in turn, is a descendant of BASIC 2.0, skipping the 4.0 iteration of the later PETs. This complex family saga may be worth a TV series.) These later versions are said to include some bug fixes. But, as I have no experience with any of them, I just don’t know whether this one is among them.

Then, there’s the question of whether this is a bug or not. The routine is designed to work on FAC#1 and on this alone. Keeping both results in the two FACs is a sensible shortcut, compared to the alternative of shuffling those 12 bits around in order to “zeroise” the second result. As far as I can see, this is the only edge case where this would matter. Is it worth slowing down all other operations for this?

(The machines running BASIC 3.5 and BASIC 7.0 all run at 1.67 or 2 MHz, so it may have been worth fixing there, as the resulting overhead doesn’t matter that much.)

Having said that, from a functional perspective, this is, of course, a nasty bug. But this is MS and not, say, HP, and their perspective on this may have been different. There were trade-offs to be made all the time, and business aspects certainly were a major argument.

What I could think of would be having a list of all the relevant ROM routine entry points and tracing just those, each with its tag name (common label) and a conventional CPU trace line. Having relevant context (like the FAC involved, or filename buffers and the like) listed alongside would be a nice-to-have. This should reduce the log to about 1% of a complete CPU trace (these are larger blocks and we’re skipping all the loops) while still carrying all the information needed to follow the path the ROM code takes. But this involves quite an effort for what is probably of limited interest, especially as there aren’t any (detailed) lists of such labels readily available. It may be interesting for a C64 emulation, though, where there is just a single ROM version and lots of people toying with this.
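To make the idea a bit more concrete, here is a minimal sketch of such a filter in Python. Everything in it is an assumption for illustration: the `filter_trace` function, the shape of the trace (pairs of program counter and a memory snapshot), and especially the `ENTRY_POINTS` table, whose addresses and labels are placeholders rather than a verified ROM map. Register state is left out to keep it short.

```python
# Hypothetical label table: entry address -> (label, context ranges to dump).
# Addresses and names are placeholders for illustration, NOT a verified ROM map.
# Each context range is (start address, length in bytes), e.g. a FAC.
ENTRY_POINTS = {
    0xBA28: ("FMULT", [(0x61, 6)]),
    0xB86A: ("FADD", [(0x61, 6)]),
}


def filter_trace(trace, entry_points):
    """Reduce a full CPU trace to one log line per labelled entry point.

    `trace` yields (pc, memory) pairs, where `memory` is any indexable
    snapshot of the address space at that step (an assumed interface).
    """
    lines = []
    for pc, memory in trace:
        entry = entry_points.get(pc)
        if entry is None:
            continue  # not a known entry point: skip loops and inner code
        label, ranges = entry
        # Dump each context range as "$ADDR:HEXBYTES".
        context = " ".join(
            f"${start:04X}:"
            + "".join(f"{memory[start + i]:02X}" for i in range(length))
            for start, length in ranges
        )
        lines.append(f"${pc:04X} {label} {context}".rstrip())
    return lines
```

An emulator would call something like this from its step hook. Only steps whose program counter matches a table entry produce output, which is where the reduction in log size would come from.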