This story amazed me too when I first heard about it a few years ago. It left me with a bitter taste as well.

I have written an article (it’s in Greek though …) about Stafford Beer and his endeavours in Chile helping President Allende. It evolved into a comparison of Central Planning with Hayek’s theories about information overload and von Mises’ polemic against socialist society.

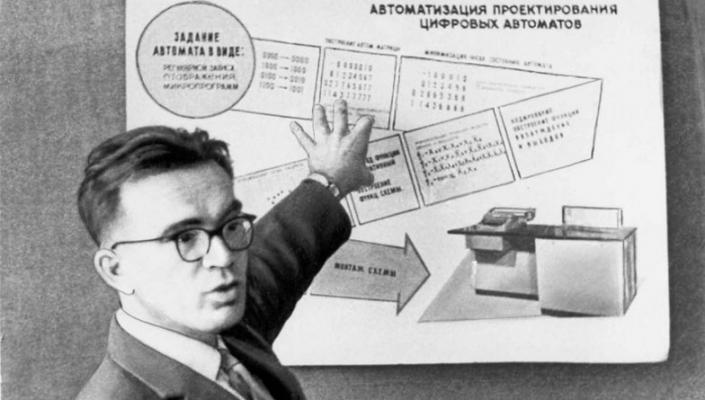

Linear Programming was first invented and used in the USSR, by Kantorovich, as an early effort towards scientific socialism. Unfortunately, it was not matched by as much computing hardware research in the USSR.

Stafford Beer had also proposed the “algidometer” (algos = pain in Greek; notice: not a “pleasuremeter”), which was going to be supplied to each household as a mass feedback device. Today there are dozens of social media platforms which extract feedback via sentiment analysis of users’ posts.

For me, the technical challenges of Central Planning can be met with today’s hardware. Naively put, the use of every screw, cog, capacitor etc. in the economy must first be identified (via digital blueprints), and then software must automatically decide how much to produce, when to produce it and where to deliver it. I argue that a naive linear system of very sparse equations describing this can be solved with the current state of the art. As an indication, in this post on stackoverflow some argue that the limit in 2012 was 10^5-10^8 variables. Of course 10^5 is too little, but 10^8 is getting there. With tricks and more memory it can be pushed a few orders of magnitude further.
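To make the “sparse linear system” idea a bit more concrete, here is a minimal sketch in Python. Everything in it is my own assumption for illustration: the three goods, the numbers, the function name `solve_production`, and the modelling choice that total output of each good equals final demand plus what other goods consume of it (an input-output style balance). Each good only lists the few goods that actually use it, which is what keeps the system sparse. It is a toy, not a claim about how a real planner would or should do it.

```python
# Toy sketch (hypothetical data and names): how much of each good to produce
# so that final demand AND the needs of other production lines are covered.

def solve_production(used_by, demand, tol=1e-9, max_iter=10_000):
    """Solve x[g] = demand[g] + sum(amount * x[user]) by Gauss-Seidel iteration.

    used_by[g] = {user: units of g consumed per unit of `user` produced}
    Only non-zero entries are stored, so each update touches few variables.
    Converges only if the economy is "productive" (inputs cost less than output).
    """
    x = dict(demand)  # start from final demand alone
    for _ in range(max_iter):
        biggest_change = 0.0
        for good, final in demand.items():
            needed = final + sum(
                amount * x[user] for user, amount in used_by.get(good, {}).items()
            )
            biggest_change = max(biggest_change, abs(needed - x[good]))
            x[good] = needed  # reuse the fresh value immediately (Gauss-Seidel)
        if biggest_change < tol:
            return x
    raise RuntimeError("iteration did not converge")


# Assumed blueprint: a machine needs 4 screws and 2 cogs, a cog needs 1 screw,
# and making a screw wears out 0.01 of a machine.
used_by = {
    "screw": {"cog": 1.0, "machine": 4.0},
    "cog": {"machine": 2.0},
    "machine": {"screw": 0.01},
}
demand = {"screw": 0.0, "cog": 0.0, "machine": 10.0}  # households want 10 machines

plan = solve_production(used_by, demand)
for good, quantity in plan.items():
    print(f"{good}: {quantity:.3f}")
```

A plain iteration like this only reads the non-zero coefficients, so memory grows with the number of actual “who uses what” links rather than with the square of the number of goods. At the scale discussed above one would of course reach for a proper sparse solver instead of Python dictionaries.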

More difficult than creating the hardware and software to solve this is deciding whether it is in the interests of society.

For: eliminate waste by more efficient production.

Against: the very word “central”. Will it stall individuals’ creativity? Will a ZX-Spectrum or an Altair ever be produced in such a system?

I believe it won’t stall, and inventions will continue. Money is a vulgar incentive for pushing the state of the art or inventing new things. It’s prevalent today, though it wasn’t always (remember the laurel as a prize in the ancient Olympic games). Even now, most researchers work at universities for relatively little, and very few of them capitalise on their work with patents.

Sorry, my post is quickly becoming political, but my incentive is a society where I have free time to do the things I love while the tedious parts are handled by the computer and the robot, and there is no middle-man to suck a percentage.

Thanks for reminding us of Stafford Beer.