An interesting little adventure prompted by a post on Huntrods’ Blog:

Z80, Taylor Series for sin(x) and why limits matter

What’s reported here is that accuracy of sin(x) computed with Taylor’s series (the usual power series, I suppose: odd powers of the angle divided by the odd factorials) falls off beyond pi.

That observation goes against my understanding, which is that these power series converge for all arguments. I suspected that what’s happening is that larger arguments cause some of the terms to get quite large, which in any given precision of arithmetic causes some loss of the small parts of those terms. And yet, as alternate terms are mostly cancelling in these cases, the small parts are going to wind up quite important in the final result.

I tested a small program using RISC OS PICO on a Raspberry Pi - helpfully, there are two versions of BBC Basic on this platform, one with 5 byte floats and the other with 8 byte floats. I expected the 8 byte version to do somewhat better for problematic arguments, which would indicate that it’s not the power series that’s unreliable, it’s just the limitations of the usual floating point arithmetic. You need more precision for the calculations than you wanted in the results, if you’re going to evaluation on large arguments.

(The usual practice is to reduce the arguments to a nice small number, by subtracting multiplies of 2pi, then multiples of pi/2 with adjustments to the argument, and then perhaps halving the argument repeatedly and making use of the half-angle formula to reconstruct the final result. So, never evaluate the power series on large arguments!)

One thing I wanted to do was see how large the largest term would be.

Here’s my code. You’ll see I’ve used meaningful variable names throughout, and lots of helpful comments:

100 REM EXPLORE POWER SERIES FOR SIN

110 REM TEST CASES SIN OF 7, 11, AND 22

120 REM TRY 5 AND 8 BYTE FLOATS

130 REM USING 5 BYTE FLOATS:

140 REM SIN(6.2831853), 1 SIG FIG

150 REM SIN(7), 7 SIG FIG

160 REM SIN(11), 4 SIG FIG

170 REM SIN(22), 0 SIG FIG

180 REM USING 8 BYTE FLOATS:

190 REM SIN(6.2831853), 6 SIG FIG

200 REM SIN(7), 14 SIG FIG

210 REM SIN(11), 12 SIG FIG

220 REM SIN(22), 6 SIG FIG

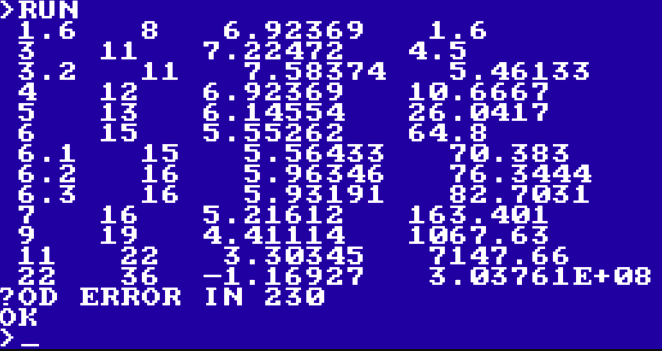

230 A=6.2831853

240 PRINT "CALCULATING SIN OF ";A

250 T=A

260 M=ABS(T)

270 A=A*A

280 S=T

290 F=-1

300 N=2

310 REPEAT

320 T=T*A/N/(N+1)

330 N=N+2

340 PRINT T

350 IF ABS(T)>M THEN M=ABS(T)

360 P=S

370 S=S+F*T

380 F=-F

390 UNTIL S=P

400 PRINT "SIN IS ";S

410 PRINT "MAX TERM WAS ";M

It’s interesting that the blogger found that single vs double precision wasn’t making a difference - that’s something I don’t yet understand.