Greetings Gentlemen,

I have a little free time on my hands again, and I was looking at my retro bookshelf.

On my shelf I have Tanenbaum’s OS Design and Implementation (which I’ve studied), Comer’s XINU book (which I’ve skimmed), and some familiar supporting literature, like Bach’s “Design of the Unix Operating System” and Plauger’s “The Standard C Library.”

I was pondering how this approach to OS design depends on preexisting support for C (e.g., in the form of ACK and libraries for MINIX) and results in operating systems that are, in a kind of spiritual way, just big complicated C interpreters.

In Plauger, for example, when you trace a call down to the bare hardware, you eventually get to a point - the point that makes everything work or not work - where he says, more or less, “you have to write this part in assembly language. Either copy one that works from an existing system, or do it from scratch: good luck!” And that’s it. Tanenbaum includes the assembly code, with a bit of commentary, but doesn’t tell you how to do it yourself. Comer dodges the whole thing by using BIOS calls for everything (at least in the PC version I have).

I mentioned in my older post how, while appreciating its importance and utility for real world systems, I find a lot of abstraction really irritating when I’m trying to learn how things work. It occurred to me that studying OS design based on C is probably a bad idea in the first place, since the whole “black box” virtual machine is the entire point, and one of the reasons for C’s wide historical success.

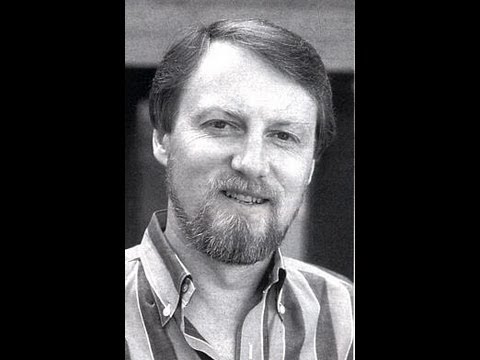

So, suppose it’s the late 70s / early 80s and I want to learn how to write an OS for my CoCo. What textbook do I buy? Is there such a textbook? Did Gary Kildall invent all the techniques he used to write CP/M (if so: WOW!) or was there an OS:DI equivalent for 8-bit and 16-bit systems?

![The Man Who COULD Have Been Bill Gates [Gary Kildall]](https://img.youtube.com/vi/sDIK-C6dGks/maxresdefault.jpg)