Things have cooled off due to other distractions, but for the past few weeks I’ve been typing in the editor from the Software Tools in Pascal book, trying to get it to work with Turbo Pascal 3.01 on a Z80 CP/M simulator.

The editor in that book is very similar in flavor to the Unix ed editor, which is a line editor.

I’m doing this because I want a better editor for CP/M than the ones I’ve tried. Out of the box, ED is just absolutely terrible. Command line character editors are awful, at least for me, especially today. Line editors are much better (ed is a line editor). Turbo Pascal’s editor is nice – for Turbo Pascal. It’s quite clunky and fiddly to use just as an editor. You have to jump through a bunch of commands and screens just to get in and out if you’re not using the compiler.

I tried VEDIT, and it’s a TECO clone. It’s easy enough to arrow around and add characters and whatnot, but as soon as you want to go beyond that, you’re dumped head first into its TECO-ish macro language. And I, honestly, don’t wish that on anybody. It’s back to command line character editing. I’d rather retype a line than move a blind pointer 10 characters over to make a change.

Lots of folks use WordStar, but that’s pretty darn heavy for just a text editor.

I keyed most of the files in using ed on Unix so as to get used to the commands and flow. Using ed to enter the source code hasn’t been painless, but it’s not bad either. And since I’m writing my own editor, I can make tweaks if I see fit (which I will soon anyway since the pattern syntax in this editor isn’t quite the same as regex is in ed).

It’s quite the little project. The book has all the code organized into several small files. And even the way it’s structured as a Pascal program is interesting. In Pascal, you can nest procedures. Historically, I’ve rarely done that myself. Typically I’d only do it for little helper functions, such as for recursive routines, things like that.

But the editor is, when all is said and done, essentially one very large procedure with several nested utility routines, and very few global variables.

This is all well and good, especially when you leverage #include files to manage the individual routines.

But while TP does support include files, it does not support nesting them. So I can’t trivially convert the #include statements from the raw source into the equivalent TP directive.

So, after I copied all of the files to a “diskette” for CP/M, I wrote my own mini-preprocessor to handle the #include lines myself. It’s straightforward, but since includes nest, it’s also recursive (at least, it’s more easily done with recursive calls). Once you run the little preprocessor, you end up with a file that’s too big for TP’s editor to load. The compiler seems to make a valiant try at it, but as soon as you get an error (and TP stops on the first error), you get a line number in a file that the editor can’t read. So you don’t know what the error is, which makes the turnaround kind of a pain.

But while writing the preprocessor, I ran into an interesting limitation with TP.

First, by default, TP does not generate code that can be used recursively. I have not disassembled any of it, but I imagine it’s using a lot of static areas for local variables and whatnot rather than stack frames. That’s OK, because there are directives to selectively enable and disable support for recursive code.
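A minimal sketch of what toggling that looks like, assuming the directive is `{$A-}` (recursion allowed) and `{$A+}` (the default, absolute code), which is how the CP/M-80 Turbo Pascal 3 manual describes it as best I can tell. The `factorial` routine is just a made-up example, not part of the editor or the preprocessor.

```pascal
program RecDemo;

{$A-}  { assumed TP 3 CP/M-80 directive: generate recursion-capable code }
function factorial(n : integer) : integer;
begin
  if n <= 1 then
    factorial := 1
  else
    factorial := n * factorial(n - 1)   { recursive call }
end;
{$A+}  { back to the default absolute (non-recursive) code }

begin
  writeln(factorial(5))
end.
```

Bracketing only the recursive routine keeps the rest of the program on the smaller, faster default code.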

However, one caveat is that when you do use recursive calls, you cannot use the var clause in the routine parameters.

Quick refresher:

    procedure thing(a : integer); begin ... end;

    procedure thing(var a : integer); begin ... end;

In the first instance, the a parameter is passed by value. In the second, the var keyword tells it to pass by reference, and that means that the value of a can be changed within the routine and it will change the underlying variable. Version 1 passes the value of a, version 2 passes a pointer to a.
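To make the difference concrete, here is a tiny sketch. The routine names `bump_copy` and `bump_in_place` are made up for illustration.

```pascal
program VarDemo;

var
  n : integer;

{ Value parameter: the routine works on its own copy of a. }
procedure bump_copy(a : integer);
begin
  a := a + 1
end;

{ Var parameter: a refers to the caller's variable. }
procedure bump_in_place(var a : integer);
begin
  a := a + 1
end;

begin
  n := 10;
  bump_copy(n);
  writeln(n);        { still 10: only the copy was changed }
  bump_in_place(n);
  writeln(n)         { now 11: the caller's variable was changed }
end.
```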

So, for some reason to do with how it manages memory, you cannot pass pointers to local variables into recursive routines. Fine.

Next, Pascal has the TEXT type, which is, essentially, a FILE of CHAR. TEXT is a file reference. In C, it would be akin to FILE *aFile, using stdio.

Turbo specifically disallows passing a TEXT (or any kind of FILE variable) to a routine by value. You have to use the var construct. (There are lots of sensible reasons for this.)

By now, perhaps, you can see my conundrum.

Initially, I had something like:

    procedure process_include(var in_file : text; var out_file : text);

You can perhaps visualize that this reads in_file, scans each line, and writes it to out_file. If it finds a #include line, it simply opens up the file named on that line and calls process_include again.

    procedure process_include(var in_file : text; var out_file : text);
    var
      work_file : text;
    begin
      ...
      if (starts_with(line, '#include')) then begin
        assign(work_file, extract_filename(line));
        reset(work_file);
        process_include(work_file, out_file);
      end;
      ...

And…that can’t work. You can’t pass the work_file without a var, and you can’t pass a var to a recursive routine.

So, I had to do my own stack to handle the files. In hindsight I might have been able to make it work with direct use of pointers and dynamic memory. But, no matter. It works.
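Roughly, the stack approach can look like the sketch below. This is an illustration, not the actual preprocessor: the file names `EDIT.P` and `EDIT.PAS`, the `MaxDepth` limit, and the simple `extract_filename` (which assumes lines of the form `#include "name"`) are all made up. The open files live in a global array, so nothing local is passed by var and nothing recurses.

```pascal
program IncStack;
{ Hypothetical sketch: expand #include lines with an explicit stack
  of text files instead of recursion. }

const
  MaxDepth = 8;            { assumed limit on include nesting }
  Marker   = '#include';

type
  LineStr = string[255];

var
  files    : array[1..MaxDepth] of text;  { the file stack, global }
  depth    : integer;                     { index of the current file }
  out_file : text;
  line     : LineStr;

{ Returns the text after the marker, minus blanks and double quotes. }
function extract_filename(s : LineStr) : LineStr;
var
  fname : LineStr;
  i     : integer;
begin
  fname := '';
  for i := length(Marker) + 1 to length(s) do
    if (s[i] <> ' ') and (s[i] <> '"') then
      fname := fname + s[i];
  extract_filename := fname
end;

begin
  assign(out_file, 'EDIT.PAS');          { made-up output name }
  rewrite(out_file);
  depth := 1;
  assign(files[depth], 'EDIT.P');        { made-up top-level source }
  reset(files[depth]);
  while depth > 0 do
    if eof(files[depth]) then
    begin
      close(files[depth]);               { pop: resume the including file }
      depth := depth - 1
    end
    else
    begin
      readln(files[depth], line);
      if copy(line, 1, length(Marker)) = Marker then
      begin
        if depth < MaxDepth then
        begin
          depth := depth + 1;            { push: switch to the included file }
          assign(files[depth], extract_filename(line));
          reset(files[depth])
        end
        else
          writeln('includes nested too deeply: ', line)
      end
      else
        writeln(out_file, line)
    end;
  close(out_file)
end.
```

The loop always reads from the file on top of the stack, so when an included file hits end of file, popping it resumes the parent right after its #include line.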

I also wrote a simple more utility. CP/M was designed back in a day when you could page through files using ^S/^Q to stop and restart the screen, and ^C to stop because the slow terminals were, well, slow. So it didn’t really need a more utility. But on modern hardware, it’s kind of necessary.

By this time, though, I’d run into another thing. By default, the CP/M diskettes I’m using are limited to 64 files in the directory, and I’ve been bouncing off that limit. It’s very exciting when you bump into it trying to save work from TP – you’re essentially doomed at that point. I can’t swap out a diskette on the fly using the simulator, and even on a real machine you can’t, because CP/M requires a warm restart every time you swap a floppy, something you can’t do from inside TP. So, when that happens, you lose work. Thankfully, I was using a modern host and modern terminal, so I just selected the text I wanted to keep, page by page, and copy/pasted it to a safe place before quitting TP. (Oh, and pasting it back in to TP? Not recommended. Not pretty.)

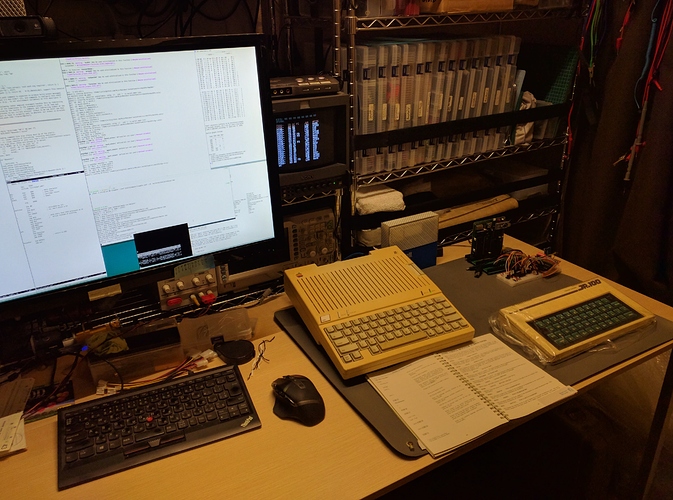

This sent me down the rabbit hole of making “diskette management” easier for the z80pack simulator I’m using, because it’s, honestly, a bit of a pain the way they do it now. If this were a “real” computer, then, yea, I’d just be swapping floppies, formatting new ones, PIPing back and forth. But on the tools I’m using from the command line, it’s awkward and a bit painful.

So, I need something a little bit higher level to manage those.

Now, z80pack out of the box comes with 4 floppies and a hard drive. I could just use the hard drive, but out of the box CP/M is pretty awful with a hard drive. No directories, the USER spaces are kind of terrible. It’s, at least with 2.2, really more of a diskette OS, so I’m trying to stay with the diskette idiom. Creating work floppies, selectively putting utilities on them, etc.

And as currently set up, it’s really a bit of a headache keeping it all straight.

So, I’m working on the meta-problem of making my CP/M “computer” a bit easier to operate.
