Indeed. And I can think of two good reasons for going this route:

First, adding a video display to a machine, as opposed to using a UART chip (or even just bit-banged serial I/O), adds considerable complexity. Learning how video signals work is great fun, and not really much more complex than learning how to put together a CPU, RAM and so on, but it’s a rather different area that requires its own knowledge and expertise.
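For anyone who hasn't met bit-banged serial I/O: instead of using a UART chip, software toggles a single output pin once per bit time to produce the same frame a UART would generate in hardware. Below is a minimal Python sketch of the common 8N1 format (one start bit, eight data bits sent least-significant bit first, one stop bit; the idle line is high). It assumes a hypothetical `set_pin` callback in place of a real output port and leaves out the bit-time delay.

```python
from collections.abc import Callable


def frame_8n1(byte: int) -> list[int]:
    """Return the line levels for one 8N1 frame (1 = mark/idle, 0 = space)."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("byte must be 0..255")
    data = [(byte >> i) & 1 for i in range(8)]  # least-significant bit goes out first
    return [0] + data + [1]  # start bit, 8 data bits, stop bit


def send_byte(byte: int, set_pin: Callable[[int], None]) -> None:
    """Bit-bang one byte by driving a (hypothetical) output pin once per bit."""
    for level in frame_8n1(byte):
        set_pin(level)
        # A real driver would wait one bit time here (about 104 us at 9600 baud).


def unframe_8n1(bits: list[int]) -> int:
    """Recover a byte from a sampled 10-bit frame, checking start and stop bits."""
    if len(bits) != 10 or bits[0] != 0 or bits[9] != 1:
        raise ValueError("framing error")
    return sum(bit << i for i, bit in enumerate(bits[1:9]))


# Usage: capture the levels that would be sent for "A" (0x41).
sent: list[int] = []
send_byte(ord("A"), sent.append)
print(sent)  # → [0, 1, 0, 0, 0, 0, 0, 1, 0, 1]
```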

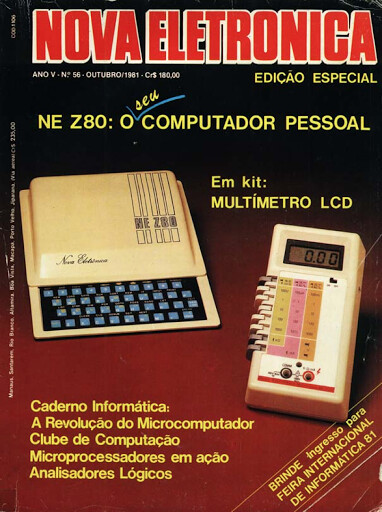

Second, this is actually the more “retro” way of using microcomputers. The rise of microcomputers with video systems around 1976-77 was most directly due to economic factors: video terminals generally cost well over $1000, easily doubling the price of a simple computer system. (Even used printing terminals started at $400-$600.) Homebrew “TV typewriters,” using a standard television as a display, were the first attempt to mitigate this expense; the Apple 1 was in fact a TV typewriter circuit that Woz had previously built, glued onto a processor plus some memory and I/O. This eventually led to integrated video circuitry becoming a standard feature, even on systems that came with a monitor such as the PET or TRS-80 Model I (the latter actually used a re-badged black-and-white TV), because of its cost advantages; the fact that falling RAM prices also made these systems easily capable of more sophisticated memory-mapped displays was basically just an added bonus.
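“Memory-mapped” here means the video circuitry continuously reads a block of ordinary RAM and turns it into a picture, so putting a character on screen takes nothing more than a single store to memory. The minimal sketch below models the TRS-80 Model I's 64×16 text screen, whose video RAM starts at 0x3C00. The `memory` array and helper names are hypothetical, and plain ASCII codes are assumed for simplicity.

```python
VIDEO_BASE = 0x3C00  # TRS-80 Model I video RAM starts here
COLS, ROWS = 64, 16  # 64x16 text screen = 1024 bytes (0x3C00-0x3FFF)

memory = bytearray(0x10000)  # hypothetical stand-in for the 64 KB address space


def screen_addr(row: int, col: int) -> int:
    """Address of the character cell at (row, col): base + row * COLS + col."""
    if not (0 <= row < ROWS and 0 <= col < COLS):
        raise ValueError("position off screen")
    return VIDEO_BASE + row * COLS + col


def put_text(row: int, col: int, text: str) -> None:
    """'Print' by storing character codes straight into video RAM (ASCII assumed)."""
    for i, ch in enumerate(text):
        memory[screen_addr(row, col + i)] = ord(ch)


put_text(2, 10, "HELLO")
print(hex(screen_addr(2, 10)))  # → 0x3c8a
```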

I’d recommend building, or at least fully understanding, a simple 8-bit single-board computer (SBC). I’ve found that many things that go on in hardware design (even at a high level, such as system address decoding) really illuminate how machines and their instruction sets work, in a way you never see from the software side. (I used to see designs with a lot of “wasted” space for I/O in memory maps and think, “What a waste of space”; now I see ones without that and think, “What a waste of logic gates.”)
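To make the address-decoding point concrete, the sketch below shows two ways to decode a hypothetical 6502-style memory map: 16 KB of RAM at 0x0000, one I/O chip with 16 registers, and 32 KB of ROM at 0x8000. This is roughly the kind of layout used on simple breadboard SBCs, but it is an assumption here. Coarse decoding examines only address lines A15, A14 and A13, so it costs just a few gates, but the I/O chip's 16 registers then repeat across an 8 KB window of “wasted” space. Full decoding confines the chip to 16 addresses, at the cost of comparing twelve address lines (A15 through A4).

```python
# Hypothetical memory map (an assumption for illustration):
#   0x0000-0x3FFF  RAM, 16 KB
#   0x4000-0x5FFF  unused
#   0x6000-0x7FFF  I/O chip with 16 registers (coarse decoding)
#   0x8000-0xFFFF  ROM, 32 KB (holds the 6502 vectors at the top)


def line(addr: int, n: int) -> int:
    """Return the level (0 or 1) of address line A<n> for a 16-bit address."""
    return (addr >> n) & 1


def decode_coarse(addr: int) -> str:
    """Partial decoding: look only at A15, A14 and A13 (very few gates)."""
    if line(addr, 15):
        return "ROM"
    if not line(addr, 14):
        return "RAM"
    if line(addr, 13):
        return "IO"
    return "none"


def io_register(addr: int) -> int:
    """The I/O chip sees only A3..A0, so its registers mirror through its window."""
    return addr & 0x0F


def decode_full(addr: int) -> str:
    """Full decoding for the I/O chip: A15..A4 must all match (more gates)."""
    if line(addr, 15):
        return "ROM"
    if addr < 0x4000:
        return "RAM"
    if (addr & 0xFFF0) == 0x6000:
        return "IO"
    return "none"


coarse_io = sum(decode_coarse(a) == "IO" for a in range(0x10000))
full_io = sum(decode_full(a) == "IO" for a in range(0x10000))
print(coarse_io, full_io)  # → 8192 16
```

The trade-off is exactly the one in the parenthetical above: the coarse version “wastes” 8 KB of address space to save logic, while the full version spends logic to save address space.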

There’s no need for soldering to do this; with reasonable care (and sticking to low clock speeds) you can build such a system on a breadboard. (But use high-quality breadboards to avoid pain!) Ben Eater has an excellent series of videos on doing this; merely watching them will probably be immensely educational. Nor do you need to use that system for all future projects: you can do your initial programming on such a system and then move up to something more sophisticated (such as a more capable pre-built SBC or an Amiga, the latter of which hides a lot more complexity than chips like a 6502 and its peripherals do) when you find your programs getting too big or complex for your little 8-bit system.

That said, the From Nand to Tetris approach is certainly quite valid too, and probably just as good if you want to gloss over some of the hardware details slightly (though it also goes more deeply into other hardware details, such as CPU design). It may be as much a matter of preference and previous experience as anything else: I not only really like playing with “real” hardware, but also came to this with a strong theoretical background, so the hands-on experience with hardware was exactly what I was missing.

Yes, this is quite a good idea; it’s portable, self-contained with a decent keyboard and display, has a serial port that makes loading/saving and cross-development easy, and detailed technical documentation is easily available for both the hardware and software. I’m not particularly fond of the 8080 CPU architecture (the 6800 and 6502 are both easier to learn and use, IMHO), but that could be as much my bias as reality. (I may also be biased in my liking for these machines; I owned and very much enjoyed a Model 100 back in the '80s, enough that I have recently bought one again, as well as a NEC PC-8201.)

And now some verging-on-off-topic notes on other comments here. If you want to get into the details of any of this, it may be better to branch off into a new thread for it.

The PDP-11 never had segmentation in the way the 8086 did; it was a 16-bit machine with a 16-bit address space (not at all unreasonable for the time) which later used a few bank-switching-like tricks to expand that a bit. Unlike the 8086, the architecture was never intended to support address spaces larger than 64 KB.
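To make the contrast concrete, the PDP-11's memory-management unit did not add a segment base to addresses the program saw. Instead, it split each 16-bit virtual address into a 3-bit page number and a 13-bit offset, and each of eight page address registers (PARs) relocated its 8 KB page in 64-byte units, producing an 18-bit (later 22-bit) physical address. The sketch below is simplified: it ignores page-length fields, access control and separate instruction/data spaces, and the `par` values are a hypothetical register setup.

```python
def pdp11_physical(va: int, par: list[int], phys_bits: int = 18) -> int:
    """Map a 16-bit virtual address through eight PARs (simplified PDP-11 MMU)."""
    if not 0 <= va <= 0xFFFF or len(par) != 8:
        raise ValueError("need a 16-bit address and eight PARs")
    page = va >> 13  # bits 15-13 select one of eight 8 KB pages
    offset = va & 0x1FFF  # bits 12-0 are the offset within that page
    # Each PAR holds a page base in 64-byte blocks, so shift it left 6 bits.
    return ((par[page] << 6) + offset) & ((1 << phys_bits) - 1)


# Hypothetical setup: pages 0-6 map straight through (0o200 blocks of 64 bytes
# = 8 KB per page); page 7 maps to the I/O page at the top of 18-bit space.
par = [page * 0o200 for page in range(7)] + [0o7600]
print(oct(pdp11_physical(0o177560, par)))  # → 0o777560
```

A program still sees only its 64 KB virtual space; the registers merely choose which physical memory backs each 8 KB page.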

For the 8086, it’s clear that one of the primary design criteria was to make it easy to replace the 8080 (or, to a lesser degree, the Z80) in embedded systems. If you had an 8080-based board and software of decent (and fairly typical) design, but with memory use bursting at the seams, only minimal and fairly easy design changes were needed to create a new board with an 8088 and reassembled software (with very minor tweaks). In most cases that solved the problem by letting you split your code, data and stack into three separate segments of up to 64 KB each. I go into more technical detail on this in this Retrocomputing SE answer. The 16-byte segment granularity was really more of a nice hack that theoretically allowed use of a 1 MB address space while still allowing efficient use of memory (which was still expensive at the time) for such upgrades.
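The 8086 arithmetic behind this is simple. A segment register value is shifted left four bits (multiplied by 16) and added to a 16-bit offset, producing a 20-bit physical address that wraps at 1 MB on the original 8086/8088. Because a segment can start on any 16-byte boundary, a loader can pack code, data and stack segments back to back, with at most 15 bytes of padding between them. The segment sizes in the sketch below are made-up examples.

```python
def physical(segment: int, offset: int) -> int:
    """8086 real-mode address: segment * 16 + offset, wrapped to 20 bits."""
    return ((segment << 4) + offset) & 0xFFFFF


def paragraphs(size: int) -> int:
    """Number of 16-byte paragraphs needed to hold `size` bytes."""
    return (size + 15) // 16


def pack_segments(base_seg: int, sizes: dict[str, int]) -> dict[str, int]:
    """Start each segment at the first free paragraph after the previous one."""
    layout: dict[str, int] = {}
    seg = base_seg
    for name, size in sizes.items():
        if size > 0x10000:
            raise ValueError(f"{name} exceeds 64 KB")
        layout[name] = seg
        seg += paragraphs(size)
    return layout


# Hypothetical program that has outgrown a single 64 KB 8080 address space.
layout = pack_segments(0x1000, {"code": 0xC123, "data": 0x9000, "stack": 0x0800})
print({name: hex(seg) for name, seg in layout.items()})
# → {'code': '0x1000', 'data': '0x1c13', 'stack': '0x2513'}
```

Here the code segment ends at physical address 0x1C122 and the data segment starts at 0x1C130, so only 13 bytes are lost to alignment. With 64 KB-aligned segments, the data segment could not start before 0x20000.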

(Further discussion of either of these should probably go to the “Thoughts on address space extensions” thread for general discussion or to a new thread for discussions of specific schemes.)

Well, I’d say lower-cost microprocessors; even at $150 or more, the microprocessors of the day were a significant cost reduction over anything else available. Nor would I say that the 6502 “trailblaze[d] the home microcomputer revolution”: that was clearly already under way. One of the U.S. “1977 Trinity” machines, the TRS-80, used a Z80, and in some markets, such as Japan, the 6502 had little impact. (The thriving Japanese microcomputer market was almost exclusively Z80- and 6800/6809-based.) Even in the U.K., until the Commodore 64 really took over, the 6502 was used mostly in mid- to high-end microcomputers, with the Z80 used in the low end.