Well, to start, there are many hundreds of dialects. You can find that many in source form on GitHub right now. If you wish to bookend the selections (dialects from the 80s, dialects for 8-bit machines, etc.), I think the number would remain largely the same.

But…

I’m also interested in unusual but useful features of Basic dialects which might be good to adopt and might not be widely known. (Inbuilt assembler, complex numbers, string slicing, extended precision arithmetic…)

So I’ve been writing articles on BASICs on the wiki for some time now, and found many little differences that may be of interest. The key is where to draw the line. For instance, if memory is no limit then you could include them all, and effectively memory is no limit on a modern machine. If we instead limit ourselves to some sort of smaller set, say ones that might run on an 8-bit machine, then we could pick and choose. In any event:

Dartmouth’s later versions added string manipulation using the CHANGE command, like CHANGE A$ TO B. This would put the ASCII codes for each character in A$ into the array B. You can then convert back with CHANGE B TO A$. There’s nothing here you can’t do with a loop, but it’s interesting. Wang used the somewhat more obvious CONVERT command for this.
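To make the CHANGE idea concrete, here is a rough Python analogue. The function names are my own invention for illustration, and details of the original (such as where the string length was kept in the array) are not modelled; it only shows the round trip between a string and an array of character codes.

```python
def change_to_array(s):
    """Rough analogue of CHANGE A$ TO B: one character code per element."""
    return [ord(ch) for ch in s]


def change_to_string(codes):
    """Rough analogue of CHANGE B TO A$: rebuild the string from the codes."""
    return "".join(chr(code) for code in codes)


b = change_to_array("HELLO")   # list of ASCII codes
a = change_to_string(b)        # back to the original string
```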

HP is the first I’ve found that used string slicing, although I cannot guarantee it’s the first. It was widely copied in other 1970s dialects, including Data General, North Star, Apple, Atari, and (later) Sinclair. String slicing has a major performance advantage: taking a slice does not make a new string in memory, it simply makes a new pointer into the existing string. In contrast, LEFT$(A$,5) makes an entirely new string, copies 5 chars into it, and returns a pointer to that, which eats up memory as well.
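The difference between a slice that points into the original and a LEFT$-style copy can be illustrated in Python, using a `memoryview` as a stand-in for the "pointer into the string". This is only an analogy for what the interpreters did internally, not a description of any particular one.

```python
buf = bytearray(b"HELLO WORLD")   # stands in for A$'s storage

# Slice as a view: no bytes are copied, just a start and length into buf.
view = memoryview(buf)[0:5]

# LEFT$-style copy: a new 5-byte object is allocated and filled.
copy = bytes(buf[0:5])

buf[0] = ord("J")                 # change the original string storage

view_text = bytes(view).decode("ascii")   # sees the change
copy_text = copy.decode("ascii")          # still the old contents
```

The view follows the change to the underlying storage because it shares it, while the copy keeps its own bytes, which is where the extra memory goes.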

HP, however, is unique in that it optionally used square brackets for defining limits. This has a significant potential advantage… In Atari, as an example, A$(1,10) makes a slice of 10 chars - but this syntax also means there is no way to define a string array! HP did not allow string arrays either; A$(1,10) and A$[1,10] were identical internally, and the brackets could also be used for numerical arrays like B[1,10].

This represents a lost opportunity, because if you say square brackets are for slicing and round for arrays, then you could do A$(1,10)[1,10], which would return the first 10 characters of item 1,10 in the A$ array. So I would STRONGLY recommend that any new dialect use this syntax.

HP and a few others optionally allowed # for “not equal”, which is actually kind of clever. It’s not terribly important, although it might be useful when porting very old code.

Some BASICs, like Tymshare’s SUPER BASIC and DEC’s BASIC PLUS, offered post-statement tests like PRINT “IT IS FIVE” IF X=5, as opposed to IF X=5 THEN PRINT “IT IS FIVE”. I’m not sure if this is because they wanted to support JOSS-like syntax, or if it’s because the original IF did not allow statements, only GOTOs (Dartmouth for instance, and many small computer versions).

Personally I don’t see a reason to use this in any modern dialect. Its syntax is somewhat more obvious IMHO, “print this if…”, in the same way that “black cat” really does make more sense in the French fashion, “cat, black”. But it makes the parser seriously more difficult, and likely the interpreter too.

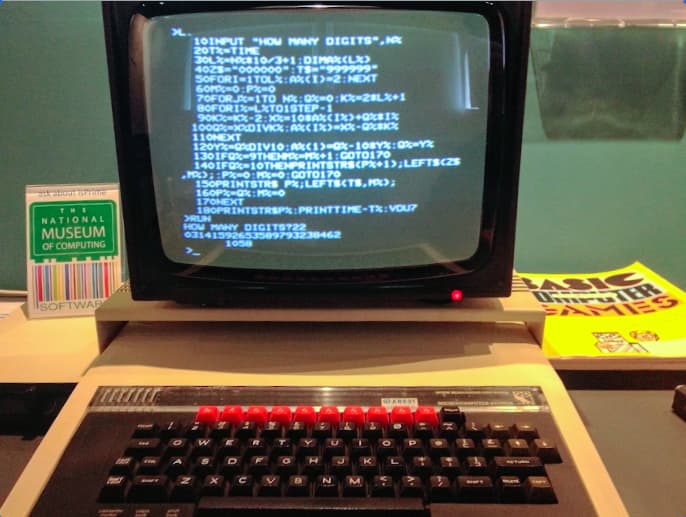

And internally there are some decisions to be made as well. Curiously, after looking at all the mainline dialects, I concluded that Atari is the most suitable for advancement. It tokenized everything at edit time… number constants were converted to internal form, variable storage was set aside during edits and the variable name in the line was then replaced by a pointer to the value, etc. In theory, this should have allowed it to run circles around something like MS, which left things like constants and variable names in their original ASCII and re-converted them at runtime. Sadly, they wrapped this great parser in the world’s worst runtime, which made it one of the slowest BASICs of its era (only TI was slower). This design is by no means unique to Atari, BASIC09 worked the same way, but it is the only mainstream example from the early 8-bit era I am aware of.
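As a rough illustration of what "tokenize everything at edit time" means, here is a small Python sketch. The class name, token tags, and keyword list are all made up for the example and do not reflect Atari's actual token format; string literals and most of the language are ignored. The point is only that numbers are converted once and variable names become slot numbers when the line is entered, not when it runs.

```python
import re

# Hypothetical lexer: numbers, names (optionally ending in $), or any single character.
TOKEN_RE = re.compile(r"\s*(\d+\.?\d*|[A-Z][A-Z0-9]*\$?|.)")
KEYWORDS = {"LET", "PRINT", "GOTO", "IF", "THEN"}


class TokenizingEditor:
    def __init__(self):
        self.variables = []   # value slots, one per variable
        self.var_index = {}   # name -> slot number, consulted only while editing

    def tokenize(self, text):
        """Convert one line of source to tokens at edit time."""
        tokens = []
        for lexeme in TOKEN_RE.findall(text.strip()):
            if lexeme[0].isdigit():
                # Constant converted to internal form once, here.
                tokens.append(("NUM", float(lexeme)))
            elif lexeme in KEYWORDS:
                tokens.append(("KW", lexeme))
            elif lexeme[0].isalpha():
                # First sighting of a variable sets aside its storage.
                if lexeme not in self.var_index:
                    self.var_index[lexeme] = len(self.variables)
                    self.variables.append(0.0)
                # The name is replaced by its slot number.
                tokens.append(("VAR", self.var_index[lexeme]))
            else:
                tokens.append(("OP", lexeme))
        return tokens
```

With this, `TokenizingEditor().tokenize("LET A=A+10")` yields tokens in which both uses of `A` refer to slot 0 and `10` is already a float, so a runtime would never need to parse digits or search for a name.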

The concept of its system is clearly superior, easy enough to implement on 8-bit machines, and should be the model for future dialects. It’s how my BASIC works.

Finally, I strongly recommend that every dialect should have some sort of LABEL concept, which can be used as the target for GOTO and GOSUB. While some support this concept, and others (like Atari and Tiny) allow you to GOTO any arbitrary expression and thus A=500:GOTO A, they all ultimately call the normal line lookup on the resulting numeric value.

My idea is slightly different: any LABELs encountered work like a variable in Atari BASIC and immediately create an entry in a table of name:lineno:location. During parse time, branches followed by a valid label name use a separate token and, as with variables, the label name is replaced by a pointer to its storage in the table. When one of these is encountered at runtime, the pointer is followed to the location value. If location holds a valid address (that is, it is not -1), you immediately branch to that location; otherwise you use the lineno to call your line lookup routine, put the result into location, and continue as before. This lazy-evals these lines for super-fast lookup, and during edits you simply set location to -1 for any entry whose lineno is greater than the lowest line number changed. If you support PROCEDURE or similar structures, these would use the same mechanism, thus eliminating the line-lookup overhead for GOSUBs.
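Here is a minimal Python sketch of that label table, just to show the lazy lookup and the invalidation on edit. All the names are hypothetical, a list index stands in for a memory address, and the linear search stands in for whatever line lookup routine the interpreter already has.

```python
class LabelEntry:
    def __init__(self, name, lineno):
        self.name = name
        self.lineno = lineno
        self.location = -1        # -1 means "not resolved yet"


class LabeledProgram:
    def __init__(self):
        self.lines = []           # sorted (lineno, text); the index plays the role of an address
        self.labels = []          # label table; branch tokens would hold an index into it
        self.lookups = 0          # counts slow searches, for demonstration only

    def add_label(self, name, lineno):
        self.labels.append(LabelEntry(name, lineno))
        return len(self.labels) - 1

    def find_line(self, lineno):
        """The ordinary (slow) line lookup."""
        self.lookups += 1
        for location, (number, _text) in enumerate(self.lines):
            if number == lineno:
                return location
        raise KeyError(lineno)

    def branch_target(self, label_index):
        """Follow the pointer; resolve lazily on first use."""
        entry = self.labels[label_index]
        if entry.location < 0:
            entry.location = self.find_line(entry.lineno)
        return entry.location

    def edit_line(self, lineno, text):
        """Insert or replace a line, then invalidate labels past it."""
        kept = [line for line in self.lines if line[0] != lineno]
        self.lines = sorted(kept + [(lineno, text)])
        for entry in self.labels:
            if entry.lineno > lineno:
                entry.location = -1
```

In this sketch, the first `branch_target` call for a label pays for one search and every later call is a plain table read, until an edit at a lower line number resets the entry.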

Of course, one can implement similar concepts for every line number, as was the case in BASIC XL for instance, or use various caches as in TURBO BASIC and others. But these all require more memory and more logic, and might not be suitable for small machines. Additionally, we can suggest that the author of the program might be aware of those locations that are often used, and make LABELs just for them, so the storage table might be quite small, perhaps 8 or 16 entries. It might be so small that you simply merge it into the variable storage as a “line number type”.