Rising to Ed’s challenge over here: B -- A Simple Interpreter Compiler - #2 by EdS

I thought I’d say something about BCPL …

It’s recorded that BCPL is the forerunner of C, but the recent B thread suggests there was an intermediate step: B, probably first written in BCPL and then in B itself. Either way, the resurrected B is so close to BCPL that it makes little difference, so who knows.

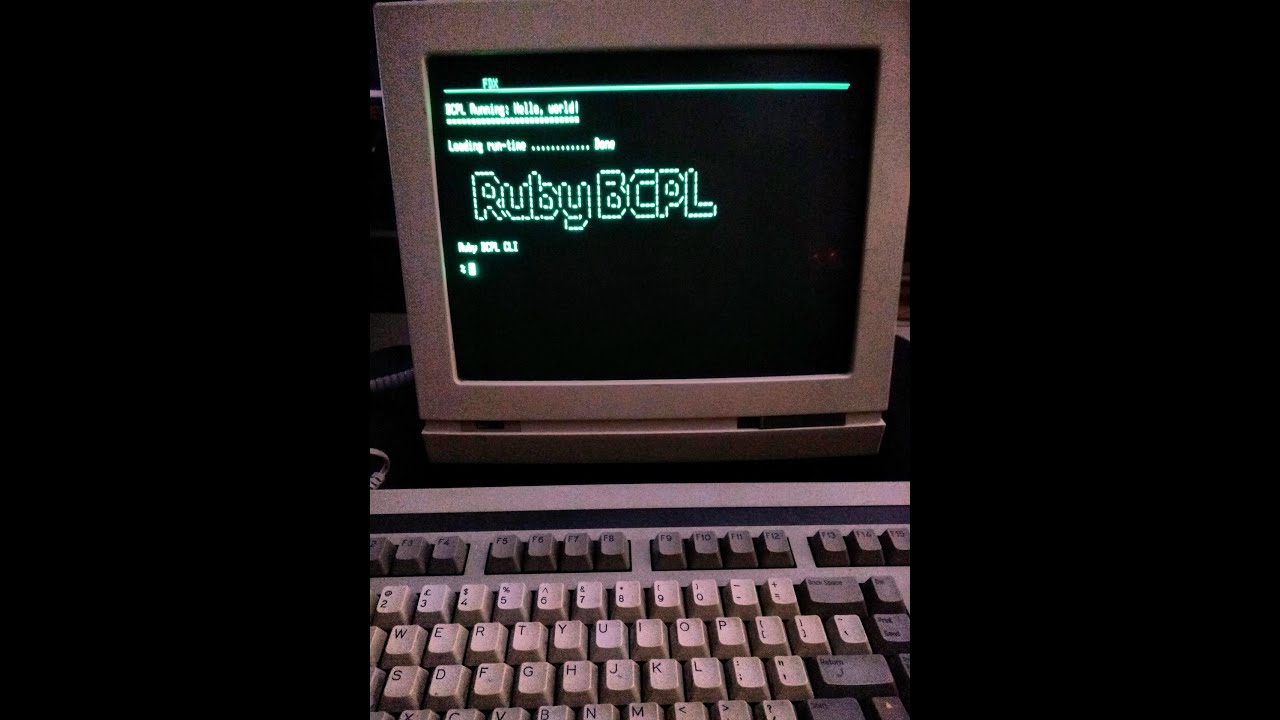

BCPL (Basic Combined Programming Language) emerged in Cambridge in the mid-60s, but the first implementation was at MIT, by Martin Richards, the language’s creator. Then (and now), it compiles into an intermediate code that is relatively efficient to interpret on different systems. In recent years it has also gained a second intermediate code that is easier to translate into native machine code.

Think of BCPL as C without types. There is just one type: the word. You can declare words or vectors (arrays) of words, and you can address these vectors by word or by byte. BCPL was initially a 16-bit system; today it’s a 32-bit (and 64-bit) system, but still more or less compatible with the old 16-bit systems.
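To make the single-type model concrete, here is a minimal sketch. It assumes Martin Richards’ current Cintcode BCPL distribution, where “!” is word subscription, “%” is byte subscription and libhdr supplies writef and newline; the BBC Micro implementation may handle byte access differently. The names v and s are purely illustrative. A vector is just a word holding the address of a block of words, and a string is a word pointing at packed bytes, with the length stored in byte 0.

```bcpl
GET "libhdr"

LET start() = VALOF
{ LET v = VEC 3     // a vector of 4 words, v!0 to v!3
  LET s = "BCPL"    // a string is a pointer to packed bytes

  // Word addressing: v!i is the i-th word of the vector
  FOR i = 0 TO 3 DO v!i := i * 10
  writef("v!2 = %n*n", v!2)

  // Byte addressing: s%0 holds the length, s%1 onwards the characters
  writef("%s has %n characters:", s, s%0)
  FOR i = 1 TO s%0 DO writef(" %c", s%i)
  newline()
  RESULTIS 0
}
```

Under Cintcode BCPL this should print “v!2 = 20” and then “BCPL has 4 characters: B C P L”. Note that nothing in the code says v is a vector or s is a string; both are just words, and the operators decide how they are used.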

My experience is with the BBC Micro in the early 80s. I wanted something a bit better than BASIC (even though BBC BASIC is the best old-school 8-bit BASIC there is, I’d been doing a lot of C by then), so BCPL it was. It’s also about three times faster than BASIC and compiles to denser code, so programs could be a little bigger. My project was a flexible manufacturing system with independent, autonomous stations controlled by BBC Micros, using a network (Econet) for communications.

BCPL is still in use today (apparently a lot of Ford’s manufacturing systems in Europe are written in it), and Martin Richards got the whole thing running on the Raspberry Pi a few years back in an effort to kindle interest. (I think the Python brigade won that battle, though.)

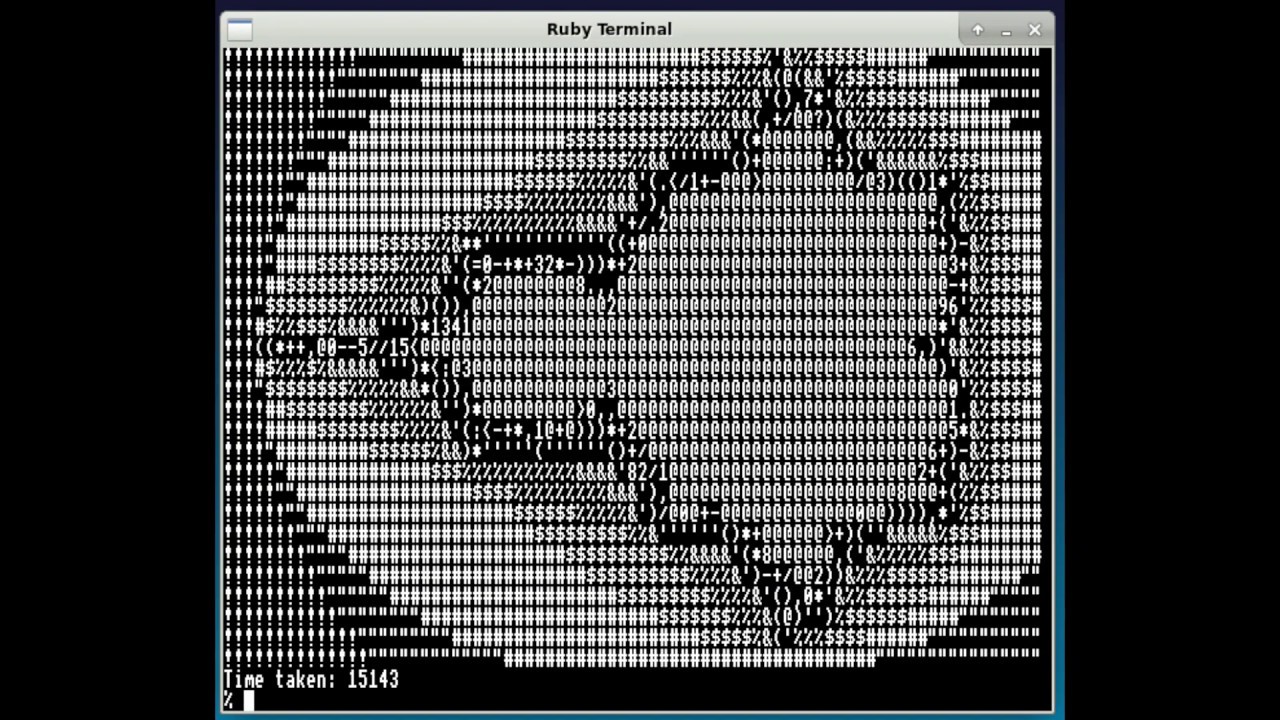

The compiler is, of course, written in BCPL and takes just 2-3 seconds to compile itself on my desktop i3 Linux system (yes, really). Compare that to gcc, etc. …

Personally, I feel it’s an ideal higher-level alternative to BASIC for those who want a bit more out of their 8-bit micro, and it’s quite capable of being self-hosting: I can edit, compile and debug BCPL programs directly on a BBC Micro or equivalent.

It was also used in the early Amiga OS, in the form of TRIPOS (another Cambridge University project), a single-user, cooperatively multitasking operating system.

Current BCPL systems use 32- or 64-bit words, which poses a small problem with floating point… BCPL was never really designed for floating-point use. The BBC Micro implementation did come with the “calculations package”, which used small 6-byte vectors to hold each floating-point number, plus library calls for floating-point addition, multiplication and so on. The current versions do support floating point, but only as big as the underlying word, so if you want double-precision (64-bit) numbers, you need to run your code on a 64-bit (Linux) system…

Ever wondered where BBC BASIC’s byte and word indirection operators (“?” and “!”, rather than PEEK and POKE) come from? They’re a product of the computer science and BCPL taught at Cambridge University, as they’re right out of BCPL…
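For comparison, here is a minimal sketch of BCPL’s own indirection, again assuming current Cintcode BCPL; x, p and v are illustrative names. The “@” operator takes the address of a variable, monadic “!” reads or writes through a pointer, and dyadic v!i is defined as !(v+i). BBC BASIC’s “!” has the same monadic (!addr) and dyadic (base!offset) forms, although there addresses count bytes and “!” transfers four bytes, whereas BCPL addresses count words.

```bcpl
GET "libhdr"

LET start() = VALOF
{ LET x = 42
  LET p = @x        // @x yields the word address of x
  LET v = VEC 2     // three words, v!0 to v!2

  !p := !p + 1      // monadic ! reads and writes through the pointer
  writef("x = %n*n", x)

  v!2 := 7          // dyadic ! subscripts a vector
  writef("!(v+2) = %n*n", !(v+2))  // v!i is the same as !(v+i)
  RESULTIS 0
}
```

This should print “x = 43” and then “!(v+2) = 7”, showing that a pointer and a vector are the same thing: a word holding an address.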

Hello world in BCPL:

```bcpl
get "libhdr"

let start() be
{
  writes("Hello, world*n")  // *n is the newline escape in BCPL strings
}
```

Personally, I think BCPL is an ideal language for less capable systems: retro 8-bit (and maybe 16-bit) computers. The compiler can easily be self-hosting on a modest 32KB BBC Micro, and the code is efficient and compact, but on today’s faster systems? C…

-Gordon