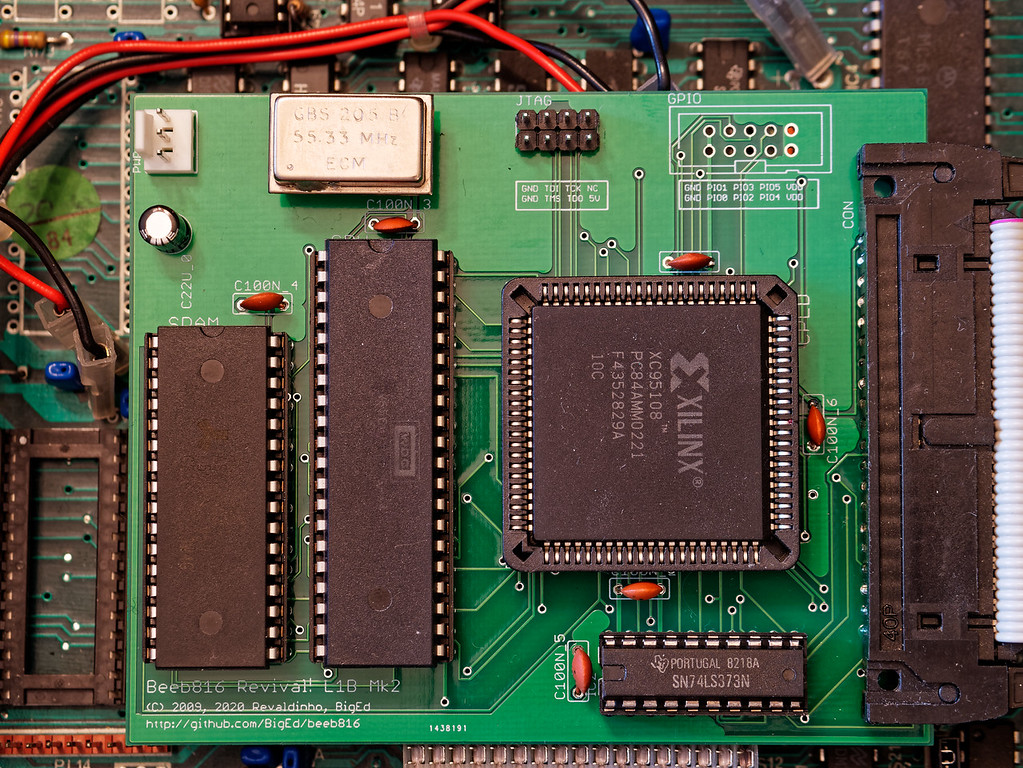

I managed to do the merest modicum of 65816 assembly programming today - pair-programming with @Revaldinho, updating the boot ROM for the Beeb816 project, which accelerates a BBC Micro to 14MHz. A previous iteration looked like this:

That iteration could take a fast clock input on a flying lead, but we’re not doing that any more.

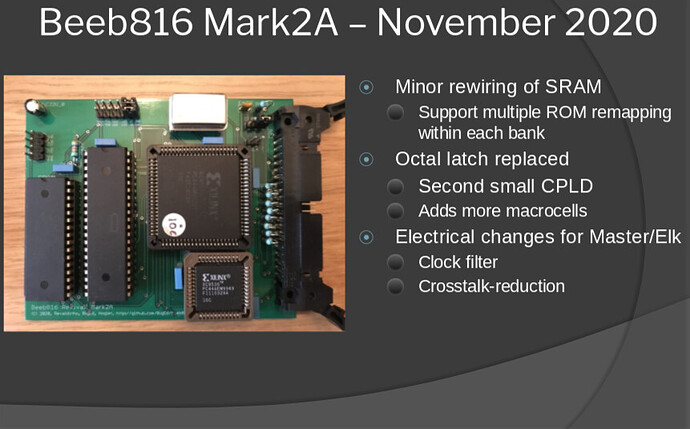

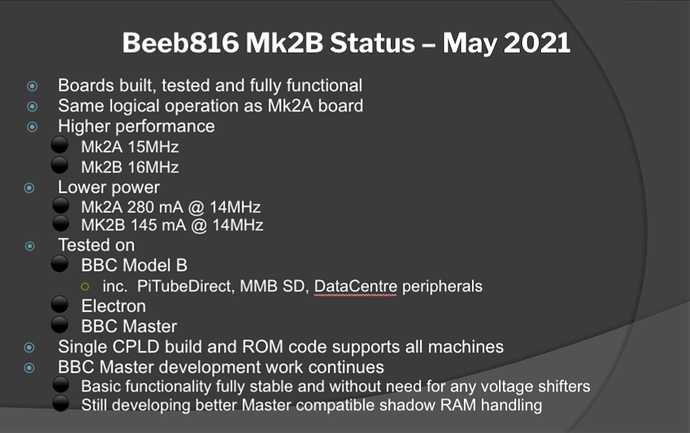

The project was started 10 years ago but has been on hiatus for about four. We got it kind of working, put it down, and more recently picked it up again and figured out the final pieces of the puzzle. We've also cooked up some ways to improve the offering.

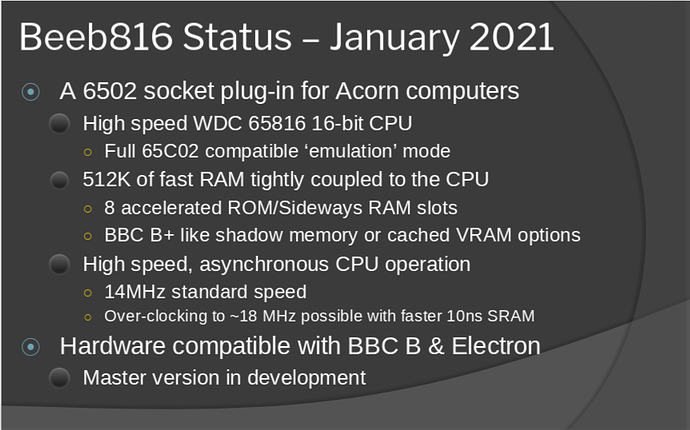

The idea - not entirely new - is to fit a fast CPU and fast local memory, with glue logic in a CPLD, on a board which goes into the original 6502’s socket.
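To make the glue-logic idea concrete, here is a minimal Python sketch of the kind of routing decision such a CPLD has to make on every bus cycle. It is not the Beeb816 logic: the `route` function, the `Target` names and the write-through policy for main RAM are hypothetical. Only the address ranges come from the standard BBC Micro memory map (32k RAM below &8000, sideways ROM at &8000-&BFFF, memory-mapped I/O at &FC00-&FEFF, OS ROM in the rest of the top 16k).

```python
from enum import Enum


class Target(Enum):
    LOCAL = "fast local memory"
    HOST = "host bus at 2MHz"
    BOTH = "local copy and host (write-through)"


def route(addr: int, is_write: bool) -> Target:
    """Hypothetical routing of one bus cycle to a 16-bit BBC Micro address."""
    if not 0 <= addr <= 0xFFFF:
        raise ValueError("expected a 16-bit address")
    if 0xFC00 <= addr <= 0xFEFF:
        # FRED, JIM and SHEILA: memory-mapped I/O must always reach the host.
        return Target.HOST
    if addr < 0x8000:
        # 32KB main RAM, which includes screen memory: read the fast copy,
        # but write through so the video hardware still sees every change.
        return Target.BOTH if is_write else Target.LOCAL
    # Sideways ROM (&8000-&BFFF) and OS ROM: read from the local mirror and
    # pass writes to the host, leaving the mirrored ROM images untouched.
    return Target.HOST if is_write else Target.LOCAL


for addr, is_write in [(0x3000, False), (0x3000, True), (0xFE40, False), (0xC000, False)]:
    kind = "write" if is_write else "read "
    print(f"&{addr:04X} {kind} -> {route(addr, is_write).value}")
```

A real design also has to track state this sketch ignores, such as which sideways ROM is currently paged in via the ROM select latch at &FE30.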

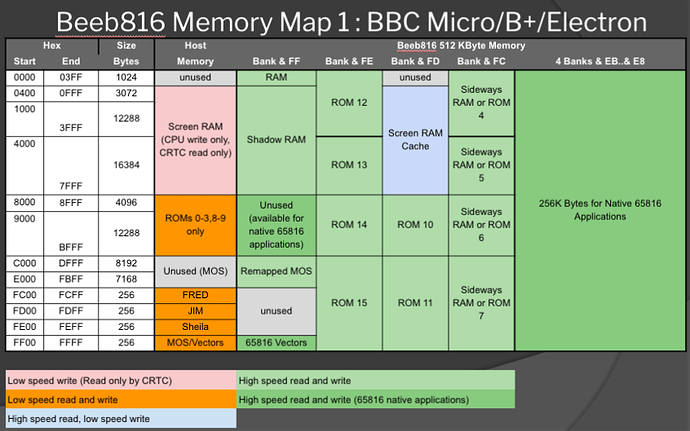

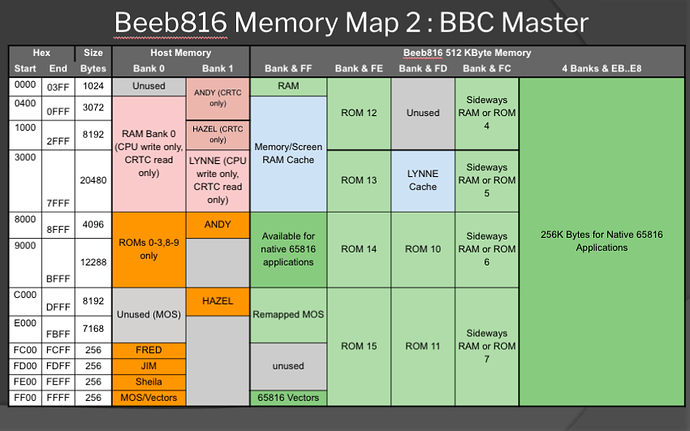

We picked a 65816 because it's in production and it's available in a convenient package. We thought it might be a nice extension to the 6502, but we're no longer sure of that. Its big advantage is easy access to more than 64k of address space, so we aim to fit the board with 512k: it can mirror the main RAM, the OS and up to 8 ROMs, shadow the video memory, and still offer some free banks for native '816 code.
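As a rough check on how far 512k goes, the sketch below totals standard BBC Micro Model B sizes (32k main RAM, a 16k OS ROM, 16k per sideways ROM) and reports what is left over. The 20k shadow-screen figure is an assumption (the size of the largest screen mode), and the `budget` helper and allocation table are hypothetical, not the project's actual layout.

```python
KB = 1024
BANK = 64 * KB  # the 65816's 24-bit address space is divided into 64KB banks


def budget(total: int, allocations: dict[str, int]) -> int:
    """Return the bytes left over after the given allocations."""
    used = sum(allocations.values())
    if used > total:
        raise ValueError("allocations exceed the available memory")
    return total - used


# Hypothetical allocation; the shadow-screen size is an assumption.
ALLOCATIONS = {
    "main RAM mirror": 32 * KB,
    "OS ROM mirror": 16 * KB,
    "sideways ROMs (8 x 16KB)": 8 * 16 * KB,
    "shadow video memory (assumed 20KB)": 20 * KB,
}

free = budget(512 * KB, ALLOCATIONS)
print(f"512KB = {512 * KB // BANK} banks; {free // KB}KB free "
      f"(~{free / BANK:.1f} banks) for native '816 code")
```

With these numbers it prints `512KB = 8 banks; 316KB free (~4.9 banks) for native '816 code`. How many whole banks remain in practice depends on how the regions are aligned.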

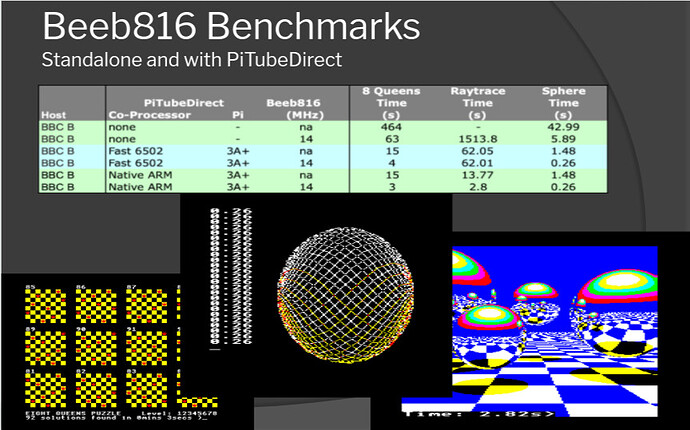

The Acorn scene now has lots of modern add-ons and upgrades, among them PiTubeDirect, which offers a 300MHz second 6502. Speeding up the Beeb itself is a nice extra win: a very fast second processor becomes limited by how quickly the host can write to the screen. We've seen a 6x speedup in benchmarks.
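The point about the second processor is essentially Amdahl's law: once the parasite is fast, only the host-bound share of the run time is left to speed up. The sketch below assumes a simple two-part model (host-bound time plus everything else) and uses the 14MHz/2MHz clock ratio as an optimistic ceiling for host-bound code; the `overall_speedup` function and the example fractions are illustrative, not Beeb816 measurements.

```python
def overall_speedup(host_fraction: float, host_speedup: float) -> float:
    """Amdahl's law: only the host-bound share of the run time gets faster."""
    if not 0.0 <= host_fraction <= 1.0:
        raise ValueError("host_fraction must be in [0, 1]")
    new_time = host_fraction / host_speedup + (1.0 - host_fraction)
    return 1.0 / new_time


# Assumption: host-bound code speeds up by the full clock ratio, ignoring
# any cycles still spent at 2MHz on I/O or screen-memory writes.
CLOCK_RATIO = 14 / 2

for fraction in (0.5, 0.9, 0.99):
    print(f"host-bound {fraction:.0%}: about "
          f"{overall_speedup(fraction, CLOCK_RATIO):.2f}x overall")
```

Under this model the overall gain only approaches the host speedup when nearly all of the remaining time is spent waiting on the host, for example writing to the screen.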